After figuring out how the TableCache works, I now want to dig a bit deeper into what the Tables are. That means that we are starting out in Table::Open. And this starts with:

So we start by reading the footer. Then we read the index block:

Construct a new table, and we are pretty much set. I went back to look at the TableBuilder, and I think that I understand things better now. There is the BlockHandle, which is basically just the offset and size of a block in the file.

Then we have the actual index, which is located at the end of the file. This one has the format of:

key, offset, size

key, offset, size

key, offset, size
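To make that layout concrete, here is a minimal sketch of how such an index can be searched. It assumes that each index key is greater than or equal to every key in the block it points to, so the first index entry whose key is >= the target names the only block worth reading. `Handle`, `Index` and `FindBlock` are hypothetical names for illustration, not LevelDB's own types, and a `std::map` stands in for the on-disk index block.

```cpp
#include <cstdint>
#include <map>
#include <optional>
#include <string>

// Hypothetical stand-in for a block handle: where a block lives in the file.
struct Handle {
  uint64_t offset;
  uint64_t size;
};

// Hypothetical in-memory index: separator key -> handle of the data block
// whose keys are all <= that separator (an assumption of this sketch).
using Index = std::map<std::string, Handle>;

// Return the handle of the only block that could contain `key`,
// or nothing if `key` sorts after every separator in the index.
std::optional<Handle> FindBlock(const Index& index, const std::string& key) {
  auto it = index.lower_bound(key);  // first separator >= key
  if (it == index.end()) return std::nullopt;
  return it->second;
}
```

With separators `d`, `m` and `z`, a lookup for `f` lands on the block behind `m`, and only that block has to be read from the file.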

The only problem is that I don't see yet where this is actually used. And here it is, inside Table::InternalGet.

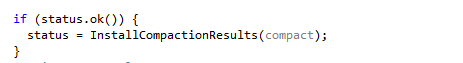

So we seek into the block data. And that matches pretty closely what I would expect here. And then we have this:

Some notes about this code: it looks like we are going to be loading the entire block into memory. But what happens if we have a very large value? For that matter, what happens if we have a very large value next to a very small value in the same block, but we only want the small value?

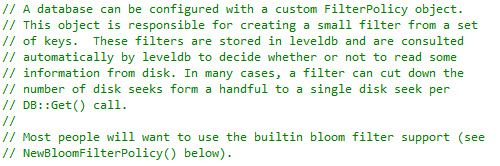

I think that I am wrong here. Looking at the code, I found this comment, which I previously missed:

And that explains really nicely how blocks work in general. The cool part happens inside Block::Iter::Seek: we first do a binary search over the restart points that we have inside the block, and then a linear search inside the restart section. By default, there are 16 items per restart, so that is going to generate a really fast turnaround in general.
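The two-phase search can be sketched as follows. This is a simplified model, not the actual Block::Iter::Seek: it keeps full keys in a vector and leaves out the prefix compression between restart points, and `SimpleBlock`, `BuildBlock` and `Seek` are hypothetical names. What it does preserve is the shape of the lookup: a binary search over the restart points, followed by a short linear scan that never covers more than one restart interval.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Simplified block: sorted keys plus the index of every restart point.
// Real blocks prefix-compress the keys between restarts; that part is
// omitted here to keep the search logic visible.
struct SimpleBlock {
  std::vector<std::string> keys;      // sorted ascending
  std::vector<std::size_t> restarts;  // indices into keys, first one is 0
};

SimpleBlock BuildBlock(std::vector<std::string> sorted_keys,
                       std::size_t restart_interval = 16) {
  SimpleBlock block;
  block.keys = std::move(sorted_keys);
  for (std::size_t i = 0; i < block.keys.size(); i += restart_interval) {
    block.restarts.push_back(i);
  }
  return block;
}

// Returns the index of the first key >= target, or keys.size() if none.
std::size_t Seek(const SimpleBlock& block, const std::string& target) {
  if (block.restarts.empty()) return block.keys.size();

  // Phase 1: binary search for the last restart point whose key is < target.
  std::size_t left = 0;
  std::size_t right = block.restarts.size() - 1;
  while (left < right) {
    std::size_t mid = (left + right + 1) / 2;  // round up so the loop ends
    if (block.keys[block.restarts[mid]] < target) {
      left = mid;
    } else {
      right = mid - 1;
    }
  }

  // Phase 2: linear scan forward from that restart point.
  std::size_t i = block.restarts[left];
  while (i < block.keys.size() && block.keys[i] < target) ++i;
  return i;
}
```

With a restart interval of 16, the linear phase touches at most 16 entries no matter how many keys the block holds.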

One key point that I think bears repeating is with regards to my previous comment about sizes. We don't actually ever copy the entire block to memory. Instead, we rely on the OS to do so for us, because the files are actually memory mapped. That means that we can easily access them with very little cost, even if we have mixed big & small values on the same block. That is because we can just skip the large value and not touch it, and rely on the OS to page in the right pages for us. That said, when reading a block, not just iterating it, we are going to allocate it in memory:

So I am not sure about that. I think that I was mistaken previously. The only problem is that the buf is actually used for scratch purposes. I think that this is right on both ends. Looking at the code, we have PosixRandomAccessFile:

This is the standard approach, actually. Read it from the disk into the buffer, and go on with your life. But we also have the PosixMMapReadableFile implementation:

And this is a lot more reasonable and in line with what I was thinking. We don't use the scratch at all.
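The difference between the two read paths can be sketched like this. It is an illustration only: `FakeFile`, `ReadCopy` and `ReadMapped` are hypothetical names, and a `std::string` stands in for the file so the example runs without any real `pread()` or `mmap()` calls. Both functions take a scratch buffer, but only the copying one writes to it.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstring>
#include <string>
#include <string_view>

// Hypothetical stand-in for a file's contents; a real implementation
// would go through pread() or a mapped region instead.
struct FakeFile {
  std::string bytes;
};

// pread-style read: copy the requested range into the caller's scratch
// buffer and return a view over the scratch buffer.
std::string_view ReadCopy(const FakeFile& f, std::size_t offset,
                          std::size_t n, char* scratch) {
  if (offset > f.bytes.size()) return {};
  n = std::min(n, f.bytes.size() - offset);
  std::memcpy(scratch, f.bytes.data() + offset, n);
  return std::string_view(scratch, n);
}

// mmap-style read: the scratch buffer is ignored and the returned view
// points straight into the (pretend) mapped bytes, so nothing is copied.
std::string_view ReadMapped(const FakeFile& f, std::size_t offset,
                            std::size_t n, char* /*scratch*/) {
  if (offset > f.bytes.size()) return {};
  n = std::min(n, f.bytes.size() - offset);
  return std::string_view(f.bytes.data() + offset, n);
}
```

The caller cannot tell which variant it got, which is why it has to supply a scratch buffer either way.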

However, we still need to allocate this scratch buffer on every single read. Looking at the code, the decision on what to allocate seems to be made here:

As you can see, it is mmap_limit_ that will make that determination. That is basically limiting us to no memory maps on 32 bits (makes sense, the 2 GB virtual address space is really small) and to a max of 1,000 mapped files for 64 bits. Given that I am assuming that you are going to run this on 64 bits a lot more often than on 32 bits (at least for server apps), it would make more sense...

Stopping right here. LevelDB is being used in the browser as well, and presumably on mobile devices, etc. That means that you can't really assume/require 64 bits. And given that most of the time, you are going to have blocks of up to 4 KB in size (except if you have very large keys), I think that this is reasonable. I would probably have done away with allocating the buffer in the happy case, but that is probably beside the point, since most of the time I assume that the values are small enough.
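The mmap_limit_ policy described above (no mappings on 32 bits, up to 1,000 on 64 bits) can be sketched as a simple budget that every file open consults. `MmapBudget` and `DefaultMmapLimit` are hypothetical names for illustration, and this version is deliberately not thread-safe; a real environment would need a lock or an atomic counter.

```cpp
// Pick the mapping budget from the pointer size: none on 32 bits,
// 1,000 on 64 bits, matching the numbers quoted in the text.
constexpr int DefaultMmapLimit() { return sizeof(void*) >= 8 ? 1000 : 0; }

// Hypothetical budget tracker. Not thread-safe; illustration only.
class MmapBudget {
 public:
  explicit MmapBudget(int limit) : remaining_(limit) {}

  // Try to reserve one mapping. On failure the caller is expected to
  // fall back to the copying, pread-style file implementation.
  bool Acquire() {
    if (remaining_ <= 0) return false;
    --remaining_;
    return true;
  }

  // Give a mapping back when the mapped file is closed.
  void Release() { ++remaining_; }

 private:
  int remaining_;
};
```

Opening a file then boils down to: if `Acquire()` succeeds, map the file; otherwise use the implementation that reads into the scratch buffer.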

I am looking at this through the eyes of someone who deals with larger values all the time, so it triggers a lot of introspection for me. And this is how we actually read a value from disk. I also managed to figure out how we write, and in what format. All in all, a good day's (actually, it was mostly at night) fun.
