That is my memory you’re freeing, you foreign thread!

RavenDB is a pretty big project, and it has been around for quite a while. That means that we have run into a lot of strange stuff over the years. In particular, support incidents are something that we track and try to learn from. Today’s post is about one such lesson. We want to be able to track, on a per-thread basis, how much memory is in use. Note that when we say that, we are talking about unmanaged memory.

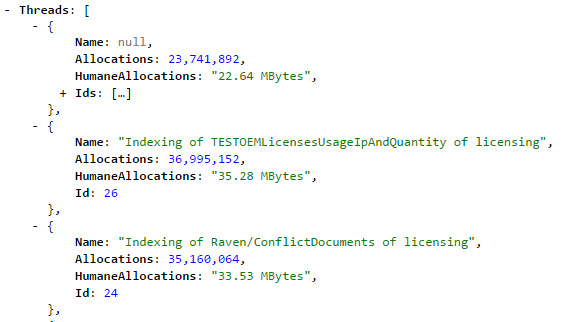

The idea is, once we track it, we can manage it. Here is one such example:

Note that this has already paid for itself when it showed us very clearly (and without using special tools) exactly who was allocating too much memory.

Memory allocation and de-allocation are often a big performance problem, and we are trying very hard not to get painted into performance corners. So a lot of our actual memory usage is allocate once, then keep it around in the thread for additional use. This turns out to be quite useful. It also means that for the most part, we really don’t have to worry about thread safety. Memory allocations happen in the context of a thread, and are released to the thread once an operation is done.

This gives us high memory locality and it avoids having to take locks to manage memory. Which is great, except that we also have quite a bit of async request processing code. And async request processing code will quite gladly jump threads for you.

So that led to a situation where you allocate memory in thread #17 at the beginning of the request and it waits for I/O, so when it finally completes, the request finishes processing in thread #29. In this case, we keep the memory we got for the next usage in the finishing thread. This is based on the observation that we typically see the following patterns:

- Dedicated threads for tasks, which do no thread hopping. Each has a unique memory usage signature and will eventually settle into the memory it needs to process everything properly.

- Pools of similar threads that share roughly the same tasks with one another, and have thread hopping. Over time, things will average out and all threads will have roughly the same amount of memory.

That is great, but it does present us with a problem: how do we account for that? If thread #17 allocated some memory, and it is now sitting in thread #29’s bank, who is charged for that memory?

The answer is that we always charge the thread that initially allocated the memory, even if it currently doesn’t have that memory available. This is because it is frequently the initial allocation that we need to track, and usage over time just means that we are avoiding constant malloc/free calls.

It does present a problem: what happens if thread #29 is freeing memory that belongs to thread #17? Well, we can just decrement the allocated value, but that would force us to always do thread-safe operations, which are more expensive.

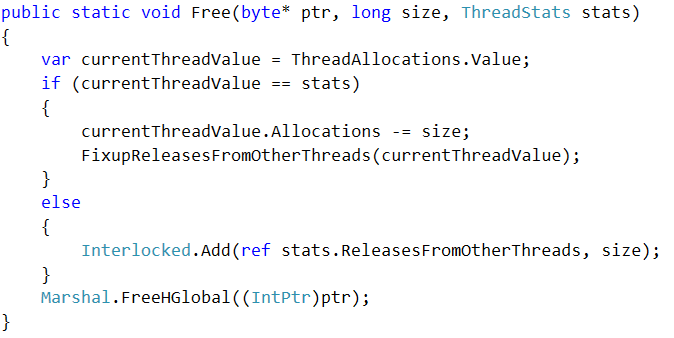

Instead, we do this:

If the freeing thread is the same as the allocating thread, just use simple subtraction, crazy cheap. But if the memory was allocated by another thread, do the thread-safe thing. Then we smash both values together to create the final, complete picture.
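The accounting scheme can be sketched roughly as follows. This is an assumption-laden illustration rather than the real implementation: the class and field names are made up, and a lock stands in for whatever atomic operation the actual code uses on the cross-thread path.

```python
import threading


class ThreadStats:
    """Allocation counters for one thread (hypothetical names)."""

    def __init__(self):
        # The thread that creates the stats object is treated as the owner.
        # A real implementation would need a sturdier identity than
        # get_ident(), since idents can be reused after a thread exits.
        self.owner = threading.get_ident()
        self.allocated = 0           # touched only by the owning thread
        self.released_by_others = 0  # touched by foreign threads, under a lock
        self._lock = threading.Lock()

    def charge(self, size):
        # Allocations always happen on the owning thread: plain addition.
        self.allocated += size

    def release(self, size):
        if threading.get_ident() == self.owner:
            # Same thread that allocated: simple subtraction, no locking.
            self.allocated -= size
        else:
            # Foreign thread: take the slower, thread-safe path.
            with self._lock:
                self.released_by_others += size

    def in_use(self):
        # Combine both counters into the final figure for this thread.
        return self.allocated - self.released_by_others
```

The owner never contends with anyone on `allocated`, so the common case stays cheap, and only frees coming from a foreign thread pay for synchronization. The value returned by `in_use` is a snapshot and may be slightly stale while other threads are still releasing.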
