NHibernate Perf Tricks

I originally titled this post NHibernate Stupid Perf Tricks, but decided to remove that. The purpose of this post is to show some performance optimizations that you can take advantage of with NHibernate. This is not a benchmark; the results aren’t useful for anything except comparing them to one another. I would also like to remind you that NHibernate isn’t intended for ETL scenarios. If you need that, you probably want to look into ETL tools rather than an OR/M developed for OLTP scenarios.

There is wide scope for performance improvements beyond what is shown here. For example, the database was not optimized, and the machine was in use for other work throughout the runs.

To start with, here is the context we are working in. It is used to execute all of the scenarios that follow.

The initial system configuration was:

```xml
<hibernate-configuration xmlns="urn:nhibernate-configuration-2.2">
  <session-factory>
    <property name="dialect">NHibernate.Dialect.MsSql2000Dialect</property>
    <property name="connection.provider">NHibernate.Connection.DriverConnectionProvider</property>
    <property name="connection.connection_string">
      Server=(local);initial catalog=shalom_kita_alef;Integrated Security=SSPI
    </property>
    <property name='proxyfactory.factory_class'>
      NHibernate.ByteCode.Castle.ProxyFactoryFactory, NHibernate.ByteCode.Castle
    </property>
    <mapping assembly="PerfTricksForContrivedScenarios" />
  </session-factory>
</hibernate-configuration>
```

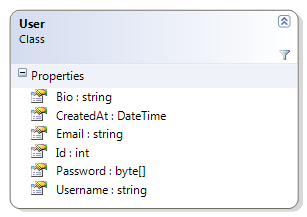

The model used was:

And the mapping for this is:

```xml
<class name="User" table="Users">
  <id name="Id">
    <generator class="hilo"/>
  </id>
  <property name="Password"/>
  <property name="Username"/>
  <property name="Email"/>
  <property name="CreatedAt"/>
  <property name="Bio"/>
</class>
```

And each new user is created using:

```csharp
public static User GenerateUser(int salt)
{
    return new User
    {
        Bio = new string('*', 128),
        CreatedAt = DateTime.Now,
        Email = salt + "@example.org",
        Password = Guid.NewGuid().ToByteArray(),
        Username = "User " + salt
    };
}
```

Our first attempt is to simply check serial execution speed, and I wrote the following (very trivial) code to do so.

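```csharp
// Namespaces used by this snippet
using System;
using System.Diagnostics;
using NHibernate.Cfg;
using NHibernate.Tool.hbm2ddl;

const int count = 500 * 1000;

var configuration = new Configuration()
    .Configure("hibernate.cfg.xml");

// Recreate the schema in the database (script: false, export: true)
new SchemaExport(configuration).Create(false, true);

var sessionFactory = configuration
    .BuildSessionFactory();

var stopwatch = Stopwatch.StartNew();
for (int i = 0; i < count; i++)
{
    // A brand new session, connection and transaction for every single row
    using (var session = sessionFactory.OpenSession())
    using (var tx = session.BeginTransaction())
    {
        session.Save(GenerateUser(i));
        tx.Commit();
    }
}
Console.WriteLine(stopwatch.ElapsedMilliseconds);
```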

Note that we create a separate session for each element. This is probably the slowest way of doing things, since it means opening and closing a connection, and beginning and committing a transaction, for every single row.

To tell you the truth, this is here to give us a baseline for how slow we can make things. Another thing to note is that this is simply serial, which is just another way in which this is not a true representation of how things happen in the real world. In real-world scenarios, we usually handle small requests, like the one simulated above, but we do so in parallel. We are also using a local database instead of the far more common remote database, which skews the results even further.

Anyway, the initial approach took 21.1 minutes: roughly one row every two and a half milliseconds, or about 400 rows/second.
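As a quick check on those rates, using the 500,000-row `count` from the code and 60 seconds per minute:

$$
21.1 \times 60 = 1266\ \text{s}, \qquad \frac{500{,}000\ \text{rows}}{1266\ \text{s}} \approx 395\ \text{rows/s}, \qquad \frac{1{,}266{,}000\ \text{ms}}{500{,}000\ \text{rows}} \approx 2.5\ \text{ms/row}
$$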

I am pretty sure most of that time went into connection & transaction management, though.

So the first thing to try was a single session, which removes the cost of opening and closing a connection and creating a new transaction for every row.

The code in question is:

```csharp
// One session and one transaction for all of the rows
using (var session = sessionFactory.OpenSession())
using (var tx = session.BeginTransaction())
{
    for (int i = 0; i < count; i++)
    {
        session.Save(GenerateUser(i));
    }
    tx.Commit();
}
```

I expect this to be much faster, but I have to explain something. Using the session for bulk operations is usually not recommended, but this is a special case: we are only saving new instances, so the flush does no unnecessary work, and because we commit only once, the save to the database is done in a single continuous stream.

This version ran for 4.2 minutes: roughly 2 rows per millisecond, or about 2,000 rows/second.
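The same arithmetic for the single-session run, plus its ratio to the 21.1-minute session-per-row baseline:

$$
4.2 \times 60 = 252\ \text{s}, \qquad \frac{500{,}000}{252} \approx 1984\ \text{rows/s}, \qquad \frac{21.1}{4.2} \approx 5.0
$$

So moving to a single session, on its own, made this contrived run roughly five times faster.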

Now, the next obvious step is to move to a stateless session, which is intended for bulk scenarios. How long would this take?

```csharp
// A stateless session skips the first-level cache and most session bookkeeping
using (var session = sessionFactory.OpenStatelessSession())
using (var tx = session.BeginTransaction())
{
    for (int i = 0; i < count; i++)
    {
        session.Insert(GenerateUser(i));
    }
    tx.Commit();
}
```

As you can see, the code is virtually identical, and I expect the performance to be slightly improved but on par with the previous version.

This version ran in 2.9 minutes: about 3 rows per millisecond, or close to 2,800 rows/second.
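Likewise for the stateless session, compared with the 4.2-minute single-session run:

$$
2.9 \times 60 = 174\ \text{s}, \qquad \frac{500{,}000}{174} \approx 2874\ \text{rows/s}, \qquad 1 - \frac{2.9}{4.2} \approx 0.31
$$

That is roughly 31% less elapsed time for what is essentially the same loop.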

I am actually surprised. I expected it to be somewhat faster, but it was much faster.

There are still performance optimizations that we can make, though. NHibernate has a rich batching system that we can enable in the configuration:

```xml
<property name='adonet.batch_size'>100</property>
```

With this change, the same code (using stateless sessions) runs in 2.5 minutes, at 3,200 rows/second.

This doesn’t show as much improvement as I hoped it would, which is an example of a real-world optimization failing to show its promise in a contrived example. The purpose of batching is to make as few remote calls as possible, which dramatically improves performance. Since we are running against a local database, the effect isn’t as noticeable.
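To put a rough number on the calls involved, assume each batch of 100 inserts reaches the server in a single round trip and ignore the occasional hilo lookups. Batching then cuts the number of calls a hundredfold, while the reported throughput (2,800 rows/s before, 3,200 rows/s after) rises only about 14%:

$$
\frac{500{,}000\ \text{inserts}}{100\ \text{inserts/batch}} = 5{,}000\ \text{round trips (vs. } 500{,}000\text{)}, \qquad \frac{3200}{2800} \approx 1.14
$$

That gap is consistent with the per-call cost being a small part of the total when the database is local.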

Just to give you some idea about the scope of what we did, we wrote 500,000 rows and 160MB of data in a few minutes.

Now, remember, those aren’t numbers you can take to the bank. Their only usefulness is in showing that, with a few very simple changes, we improved performance in a really contrived scenario by 90% or so. And yes, there are other tricks you can use (preparing commands, increasing the batch size, parallelism, etc.), but I am not going to outline them, for the simple reason that this performance should be quite enough for anyone who is using an OR/M. That brings me back to my initial point: OR/Ms are not about bulk data manipulation. If you want to do that, there are better methods.
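One way to read the "90% or so": it is the reduction in elapsed time from the session-per-row baseline (21.1 minutes) to the batched stateless session (2.5 minutes). Measured as throughput, the same change is roughly eightfold, from about 400 to 3,200 rows/s:

$$
1 - \frac{2.5}{21.1} \approx 0.88, \qquad \frac{3200}{400} = 8
$$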

For the scenario outlined here, you probably want to use SqlBulkCopy, or your database’s equivalent. Just to give you an idea why, here is the code:

```csharp
// Namespaces used by this snippet
using System;
using System.Data;
using System.Data.SqlClient;
using NHibernate.Engine;

// Build the whole data set in memory, shaped like the Users table
var dt = new DataTable("Users");
dt.Columns.Add(new DataColumn("Id", typeof(int)));
dt.Columns.Add(new DataColumn("Password", typeof(byte[])));
dt.Columns.Add(new DataColumn("Username"));
dt.Columns.Add(new DataColumn("Email"));
dt.Columns.Add(new DataColumn("CreatedAt", typeof(DateTime)));
dt.Columns.Add(new DataColumn("Bio"));

for (int i = 0; i < count; i++)
{
    var row = dt.NewRow();
    row["Id"] = i;
    row["Password"] = Guid.NewGuid().ToByteArray();
    row["Username"] = "User " + i;
    row["Email"] = i + "@example.org";
    row["CreatedAt"] = DateTime.Now;
    row["Bio"] = new string('*', 128);
    dt.Rows.Add(row);
}

// Borrow a connection from NHibernate's connection provider
using (var connection = ((ISessionFactoryImplementor)sessionFactory).ConnectionProvider.GetConnection())
using (var copy = new SqlBulkCopy((SqlConnection)connection))
{
    copy.BulkCopyTimeout = 10000; // seconds
    copy.DestinationTableName = "Users";
    // Map each DataTable column to the table column with the same name
    foreach (DataColumn column in dt.Columns)
    {
        copy.ColumnMappings.Add(column.ColumnName, column.ColumnName);
    }
    copy.WriteToServer(dt);
}
```

This finishes in 49 seconds, or about 10,000 rows/second.

Use the appropriate tool for the task.

But even so, getting to 1/3 of the speed of SqlBulkCopy (the absolute top speed you can get to) is nothing to sneeze at.
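For reference, the 49-second SqlBulkCopy run set against the best reported NHibernate figure of 3,200 rows/s:

$$
\frac{500{,}000}{49} \approx 10{,}204\ \text{rows/s}, \qquad \frac{3200}{10{,}204} \approx 0.31
$$

which is in line with the "1/3 of the speed" estimate.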

Comments

Spot on! Use the right tool for the task!!!! Haha this post is sort of the discussion ender. There is nothing more to say on the subject. You can't argue with this. :)

Unfortunately, I can:

So by this post, Oren, you have confirmed that our tests for NH are near-optimal. We use almost identical code.

Our tests show our performance is 2 times higher, or just 1.5 times slower than SqlBulkCopy. And, as I've mentioned, today I'll explain how to get even higher performance (I expect we'll get at least ~15-20% more) in my blog ( http://blog.dataobjects.net ).

I think being even 1.5 times slower than SqlBulkCopy is more than good acceptable for complete storage independence.

And finally, I explained many many times why we don't test SqlBulkCopy: ormbattle.net/.../...stead-of-common-in-tests.html . Think if this is related to ORM at all.

Finally, I think you must say something exact about a kind of bet I proposed to you here: ormbattle.net/.../...i-dont-believe-oren-eini.html - I won't simply forget this.

"good acceptable" => "acceptable".

P.S. Thanks a lot for spending your time to finally investigate this!

Since when is bashing the competition a valid sales technique? The only thing is that you lose respect from potential customers. If this new tool is indeed so much better (in all aspects, because I only hear performance arguments, which is absolutely not the most important thing for an ORM) then the public will decide that for itself.

I also don't understand why Ayende is spending so much time on these silly things. Why not ignore it? Then a lot fewer people would even know about it, and it is not as if you can ever change this person's mind.

@ Mark: ormbattle.net/.../...t-is-a-biased-test-suite.html

@Alex how does that link explain why bashing the competition is good, and show me that you are talking about anything other than performance?

Sorry, "topic links".

Alex Yakunin,

I removed the links comment.

As an aside, please be aware that all the links in the comments include rel="nofollow", so they don't result in any Google juice.

@Alex hmm, let me try to rephrase my questions: how do these links explain why bashing the competition is good, and show me that you are talking about anything other than performance?

Alex Yakunin,

You also seem to have missed the point of this post. Have you seen the picture?

I get a "Reported Attack Site!" warning when navigating ormbattle.net. Apparently Google was offered a trojan there a few days ago. Gotta love Russia.

Yes... It was infected by a virus right after launch: our developers had forgotten to set up the security properly. We resolved the issue almost immediately, but Google still remembers this, although the site is safe now.

I'd appreciate any ideas on how to fix this.

Alex, is the source code of the benchmark itself publicly available? Even if we can argue all year long about how useful the benchmark itself is, there might be some people interested in actually profiling the frameworks to see where the 'bottleneck' is.

@ Oren: Which one? With hummer? Yes. If you're talking about this picture, I can only repeat the same ("I explained many many times why we don't test SqlBulkCopy"). I agree with your point: appropriate tool must be used for bulk insertions. But I wrote many many times in fact we didn't measure perf. of bulk insertions.

Ok, 100 insertions can be considered as bulk insertion operation (think about many-to-many rel. operations)? 10 insertions? Note that exact number does not matter much for the purpose of this test.

Btw, you still didn't answer my "bet". Sorry for pushing you, but since you're criticizing me publicly, I think you must follow the same rules here as well.

Alex,

Your tests are all about running queries in a loop.

Whenever you call the DB in a loop, it is a bug.

Take a look at the fallacies of distributed computing to understand exactly why.

As for your bet, assuming you mean batching, it is meaningless. NH has had this for 4 years.

Yes: http://code.google.com/p/ormbattle/downloads/list

If you need access to source code repository (there is most current test code), please write to info @ ormbattle.net.

Well, I promised to describe our own batching & related techniques, and I'm writing the post about this ;) So I'm talking about the ideas I'm going to share.

I don't care about ADO.NET batching - i.e. obviously, I knew it exists, and I didn't mean it.

Moreover, I also wrote about materialization speed. If current materialization speed of NH is good enough, just say you won't optimize this further, and confirm that our results are meaningless in real life in action.

Ayende, I like reading your blog very much, but please, please stop blogging about performance and benchmarks for a while. I am really tired of seeing this one special face here over and over again. I miss blogs like the one about the Erlang stuff. I would like to read something about the Axum Incubation Project, F#, or maybe about the SQLAlchemy ORM for Python; it should work with IronPython now. What is possible with an ORM running on the DLR compared to CLR ones? Or is it more fun to use NH on IronPython? Thanks for your very informative blogs. I have been in the IT business for 20 years and learn something new with every post from you. I really enjoy it... but not this Alex stuff...

chris +1

I don't think this company and its advertisements are worth your energy.

The washing powder of the advertising company always washes cleaner than the "regular washing powder".

I agree with Chris. I don't think anybody who has common sense will be ditching NHibernate for the ORMBattle ORM just because of some basic benchmarks.

I would much rather read about the Macto project progress, non-SQL databases, etc.

Alex,

You miss a very important point.

I have no interest in participating in your company's marketing ploys.

I can totally understand why you don't want to take this guy's crap lying down, but I have to +1 as well: this post is a slam dunk. There just is no debating this fool if this doesn't make him realize the fallacy of his premise.

The picture of the "hummer" sums it up pretty nicely :)

I think this post is helpful. Still, is there any caveat emptor in using a stateless session? Would NHibernate Prof help guide me in any way?

On a side note, I personally would like Alex to go away. He isn't adding anything useful to the mix. I vote to ban him from this site.

I don't know what exactly Alex tried to achieve, but I can say with near certainty: neither I nor my company will EVER even consider their ORM tool, no matter how good it is.

firefly,

IStatelessSession is a really tricky one. I'd say: if you need large batching operations and NHibernate performance doesn't cut it for you, go for raw ADO.NET batching instead as Oren mentioned.

Alex I'm wondering, even if your orm does these kind of operations faster, the whole point is.. an orm isn't a batch processor, you can get as excited as you want that your orm is king of batching, but put in the ring with batching systems your orm won't stand a chance.

These sort of benchmarks just don't show a reality, maybe the reality is that your orm is faster, but you aren't convincing anyone with those kinda tests.. as you can see you are just alienating yourself, and I don't buy the argument that any press is good, not in such a professional environment.

I really like your screwhammer picture! It really sums up what this whole 'techie soap' is about.

Ayende

There have been times in the past when I have made the mistake of designing "long and open" sessions, and leveraging NHibernate for ETL.

As an API user I assumed these would be realistic things to do. Maybe it was my own ignorance, but do you know of any place that helps describe when NHibernate is NOT a good idea?

@Firefly,

IStatelessSession is mostly for just such tasks, when you want to go through a lot of data very fast.

In essence, it is a shortcut through NHibernate, taking care of only very few things in order to speed things up.

NH Prof can certainly track stateless sessions, yes

@Ray,

The fun of using IStatelessSession is that you don't need to worry about hydrating your entities, and you can take advantage of NH's mapping, database independence, etc.

@Pete

Right there in the NHibernate documentation :-)

http://nhforge.org/doc/nh/en/index.html#batch

thanks!

My thingy is faster than your thingy blah blah blah...

Don't forget MySQL was fast too, until they started implementing features.

@ Stephen: I'm curious, what is batching system. Is this related to databases / ORM tools at all?

I wrote about batching because, as tests show, many ORM have this feature, and, argue you or not, it is important. Batching in ORM appeared much earlier than e.g. these tests.

So I don't understand your point.

Well, this isn't our case, believe me ;)

@Alex,

You'd better relax, man. I really think Ayende should just ban you from his blog. The reason he hasn't done this yet is that he is a respectful person. But you simply use other people's blogs for promotion, and that's f..ing dirty. IMHO, nobody will take your product seriously, for a couple of reasons:

No tricks will force me to prefer a well known, open source, time proof, well supported system (NH) to a commercial product done by few guys from Ural. I am not that crazy.

The only company phone number I found on the web site is the cell which belongs to you personally (Megafon - Ural - cheapest russian network) - ridiculous

Your promotion is really dirty, you gain no respect from community. So I think your customer relation is the same.

I doubt that your company has any idea about delivery management. All that I see is that you keep saying - we fix this immediately. So I expect that the product lacks code coverage and so on.

Not to beat a dead horse to death, but there seems to be some reasonable folks out there doing comparisons.

I just ran across this:

gregdoesit.com/.../nhibernate-vs-entity-framewo...

and basically the bottom line is: choose an ORM for the features, and don't worry about performance, since the tests aren't real world anyways.

@LEXX

You'd better show at least some respect. Judging ORM tools by phone numbers and geographical coordinates is unheard-of stupidity, for my money. BTW, I hope Ayende doesn't need any support from such emo boys. Anyway, that's ok! What is not ok is that LEXX represents the general level of the community here: aggressive, arrogant and disrespectful to other points of view.

No need to wonder if most of the guys bashing Alex have ever given a try to his tool.

Nicer and nicer:

Yep, 6-person team x 2.5 years. Now honestly compare this to NH team.

Phone & fax: +7 343 263 7174 (general), sales: +7 922 222 7300

Yes. That's my phone, and I never hide this.

Definitely not cheapest.

I'd say, clever. Our working hours significantly differ from Europe and US, that's why I leave my cell phone. And yes, I answer even at 4AM.

So do you see anything that wasn't fixed? ;)

Actually I couldn't assume such ... even exist.

~ 75%, I'll provide full info shortly. That's lower than code coverage of NH, but definitely not significantly: stackoverflow.com/.../is-there-any-info-on-nhib...

Btw, NH has really good code coverage. But what about the others? Frankly speaking, I was unable to find any coverage info about other ORM tools at all.

So, @LEXX... Your post is definitely uber-fail here ;)

Alex, I believe you're a good guy, with honest intentions and good product. But I also believe that you are doing yourself and your product a big disservice by crusading here. You obviously struck a nerve with Ayende, and vice versa, but stop this now, before it's too late.

for those of you who still don't know what the only intention of alex here is read this book:

Positioning: The Battle for Your Mind, 20th Anniversary Edition

...Positioning describes a revolutionary approach to creating a "position" in a prospective customer's mind that reflects a company's own strengths and weaknesses as well as those of its competitors...

@ALL: if you keep commenting alex posts he will continue posting and posting. Just stop doing this and in a while he will be forgotten....

Although it isn't related to the subject, the book is really good.

I'd be glad, but you comment and comment ;)

Thanks, Ayende, for this blog. NHibernate is giving me performance issues even on the most trivial of tasks, so I hope what you put in this article will help me. Regardless of how powerful and easy an ORM tool can make your life, performance will always be at the top of the list. Great will be the day when an ORM can perform very close to straight ADO.NET. I find all your posts informative. Keep it up.

Goku,

That hasn't been my experience. Moreover, I can tell you that NH often outperforms hand-rolled data access layers.
