Recently we had to tackle a seriously strange bug. A customer reported that under a specific set of circumstances, when loading the database with many concurrent requests, they would get an optimistic concurrency violation from RavenDB.

That is the sort of error that we look at and go: “Well, that is what you expect it to do, no?”

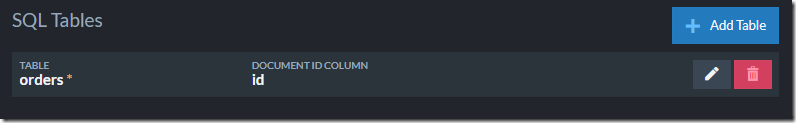

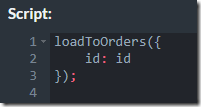

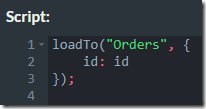

The customer code looked something like this:

As you can imagine, this code is expected to deal with concurrency errors, since it is explicitly meant to run from multiple threads (and processes) all at once.
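As a generic illustration of that pattern (this is not the customer's code, and it does not use the RavenDB client API), here is a minimal sketch of a lock document guarded by optimistic concurrency. The in-memory `Store`, the integer version standing in for a change vector, and `try_acquire` are all hypothetical names invented for the example.

```python
class ConcurrencyError(Exception):
    """Raised when a write is based on a stale version of a document."""


class Store:
    """Hypothetical in-memory stand-in for a document database."""

    def __init__(self):
        self._docs = {}  # doc id -> (value, version)

    def load(self, doc_id):
        # A missing document is reported as (None, 0).
        return self._docs.get(doc_id, (None, 0))

    def store(self, doc_id, value, expected_version):
        _, current = self._docs.get(doc_id, (None, 0))
        if current != expected_version:
            # Someone else wrote the document since we loaded it.
            raise ConcurrencyError(doc_id)
        self._docs[doc_id] = (value, current + 1)
        return current + 1


def try_acquire(store, lock_id, owner):
    """Try to take the lock; losing a race is an expected outcome."""
    holder, version = store.load(lock_id)
    if holder is not None:
        return False  # already held by someone else
    try:
        store.store(lock_id, owner, expected_version=version)
        return True
    except ConcurrencyError:
        return False  # another worker won the race
```

The point of the sketch is that a concurrency error on a contended lock is normal and handled. A concurrency error on a lock that only one thread ever touches is not.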

However, in the customer’s scenario there were two separate types of workers running. One type accessed a shared set of locks, and there you might reasonably expect to get a concurrency error. The second type ran only in the main thread, so there should have been no contention on its lock at all. Yet the customer was getting a concurrency error on that particular lock, which shouldn’t happen.

Looking at the logs, it was even more confusing. Leaving aside that we could only reproduce this issue when we had a high contention rate, we saw some really strange details. Somehow, we had a write to the document coming out of nowhere?

It took a while to figure out what was going on, but we finally found the root cause. RavenDB internally does not execute transactions independently. That would be far too costly. Instead, we use a process called transaction merging.

Here is what this looks like:

The idea is that we need to write to the disk to commit the transaction. That is an expensive operation. By merging multiple concurrent transactions in this manner, we are able to significantly reduce the number of disk writes, increasing the overall speed of the system.

That works great, until you have an error. The most common error, of course, is a concurrency error. At this point, the entire merged transaction is rolled back and we will execute each transaction independently. In this manner, we suffer a (small) performance hit when running into such an error, but the correctness of the system is preserved.
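The merge-then-fall-back behavior described above can be sketched roughly as follows. This is a toy model under stated assumptions, not RavenDB's implementation: `Store`, `put`, and `run_merged` are hypothetical names, a deep copy stands in for a real transaction, and the `commits` counter stands in for disk writes.

```python
import copy


class ConcurrencyError(Exception):
    pass


class Store:
    def __init__(self):
        self.docs = {}    # doc id -> (value, version)
        self.commits = 0  # stands in for expensive disk writes


def put(docs, doc_id, value, expected_version):
    """Write a document, failing if the expected version is stale."""
    _, current = docs.get(doc_id, (None, 0))
    if expected_version != current:
        raise ConcurrencyError(doc_id)
    docs[doc_id] = (value, current + 1)
    return current + 1


def run_merged(store, transactions):
    """Run the callables in one merged commit; on a conflict, roll back
    the whole merged attempt and run each transaction on its own."""
    results = [None] * len(transactions)
    scratch = copy.deepcopy(store.docs)
    try:
        for i, tx in enumerate(transactions):
            results[i] = ("ok", tx(scratch))
        store.docs = scratch
        store.commits += 1  # one write for the whole merged batch
        return results
    except ConcurrencyError:
        pass  # scratch is discarded, which is the rollback

    for i, tx in enumerate(transactions):
        scratch = copy.deepcopy(store.docs)
        try:
            results[i] = ("ok", tx(scratch))
            store.docs = scratch
            store.commits += 1  # one write per successful transaction
        except ConcurrencyError as e:
            results[i] = ("conflict", str(e))
    return results
```

Note that in the fallback path, every transaction that ran successfully in the merged attempt is executed a second time. Anything those transactions remember from the first run is now stale.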

The problem in this case would only happen when we had enough load to start doing transaction merging. Furthermore, it would only happen if the actual failure occurred on the second transaction in the merged sequence, not the first one.

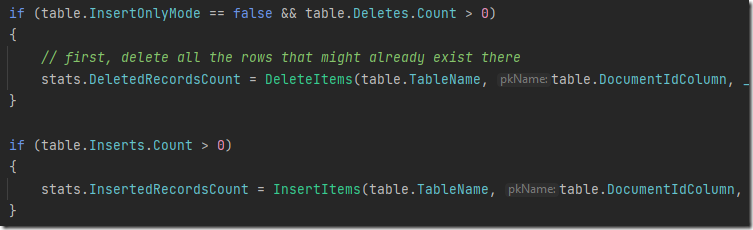

Internally, each transaction does something like this:

Remember that aside from merging transactions, a single transaction in RavenDB can also have multiple operations. Do you see the bug here?

The problem was that we successfully executed the transaction batch, but the next one in the same merged transaction failed. RavenDB did the right thing and executed the transaction batch again independently (this is safe to do, since we rolled back the previous transaction and are operating on a clean slate).

The transaction batch itself, on the other hand, had its own state, separate from the database transaction. When we replied to the caller, we used the old state, the one that came from the old transaction that was rolled back.

The only state we return to the user is the change vector, which is used for concurrency checks. What happened was that we got the right result, but for the old change vector. Everything worked, and the actual state of the database was fine. The only way to discover this issue is if you continue to make modifications to the same document in the same session. That is a rare scenario, since you typically discard the session after you save its changes.

In any other scenario, you’ll re-load the document from the server, which will give you the right change vector and make everything work.
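To make the failure mode concrete, here is a small sketch of a batch object that keeps its reply as state across a rollback and retry. Everything here is hypothetical and simplified: `Database`, `Batch`, the `"A:<n>"` change vector format, and the assumption that the etag counter is not rewound by a rollback are all inventions for the example, not RavenDB internals.

```python
class Database:
    def __init__(self):
        self.docs = {}      # doc id -> change vector of the committed version
        self.next_etag = 0  # assumption: not rewound when a transaction rolls back

    def write(self, working, doc_id):
        self.next_etag += 1
        working[doc_id] = f"A:{self.next_etag}"
        return working[doc_id]


class Batch:
    """A transaction batch that carries its own reply state."""

    def __init__(self, doc_id, reset_on_execute):
        self.doc_id = doc_id
        self.reset_on_execute = reset_on_execute
        self.replies = []

    def execute(self, db, working):
        if self.reset_on_execute:
            self.replies.clear()  # the fix: forget the rolled-back attempt
        self.replies.append(db.write(working, self.doc_id))

    def reply(self):
        return self.replies[0]


def save(reset_on_execute):
    db = Database()
    batch = Batch("locks/main", reset_on_execute)

    # Merged attempt: our batch succeeds, a later one fails,
    # so the working copy is thrown away (rollback).
    working = dict(db.docs)
    batch.execute(db, working)

    # Independent retry on a clean slate, which commits.
    working = dict(db.docs)
    batch.execute(db, working)
    db.docs = working

    return batch.reply(), db.docs["locks/main"]


print(save(reset_on_execute=False))  # reply differs from what was committed
print(save(reset_on_execute=True))   # reply matches what was committed
```

In the buggy variant the database holds the correct document, but the session is handed a change vector from a write that never committed. The next save of the same document from the same session then fails its concurrency check, even though nobody else touched the document.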

Like all such bugs, when we look back at it, this is pretty obvious, but to get to this point was quite a long road.