In the previous post, we looked into what it would take to reduce the cost of filtering negative numbers. We got into the assembly and analyzed exactly what was going on. I don’t think that even hand-optimized assembly would take that particular approach much further. Let’s see if there are other options available to us for getting better speed.

The first thing that pops to mind here is loop unrolling. After all, we have a very tight loop; if we can do more work per loop iteration, we might get better performance, no? Here is my first version:

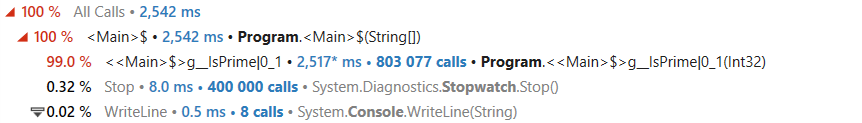

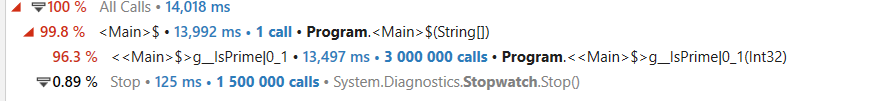

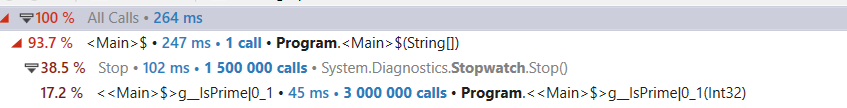
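As a rough text version of the idea, here is a minimal sketch of a four-way unrolled, in-place filter. It is not the exact benchmark code: the names `UnrollSketch` and `FilterCmpUnroll` and the `ref int outputIdx` parameter are assumptions made for illustration. It uses plain array indexing, so the JIT is free to emit range checks.

```csharp
using System;
using System.Runtime.CompilerServices;

// Hypothetical names, for illustration only.
public static class UnrollSketch
{
    // Compacts the non-negative values to the front of `items`
    // and returns how many values were kept.
    public static int FilterCmpUnroll(long[] items)
    {
        int outputIdx = 0;
        int i = 0;

        // Main loop: four elements per iteration.
        for (; i + 4 <= items.Length; i += 4)
        {
            AddToOutput(items, items[i + 0], ref outputIdx);
            AddToOutput(items, items[i + 1], ref outputIdx);
            AddToOutput(items, items[i + 2], ref outputIdx);
            AddToOutput(items, items[i + 3], ref outputIdx);
        }

        // Tail loop: the zero to three leftover elements.
        for (; i < items.Length; i++)
        {
            AddToOutput(items, items[i], ref outputIdx);
        }

        return outputIdx;
    }

    [MethodImpl(MethodImplOptions.AggressiveInlining)]
    private static void AddToOutput(long[] items, long item, ref int outputIdx)
    {
        // outputIdx never gets ahead of the read position,
        // so writing in place cannot clobber unread data.
        if (item >= 0)
        {
            items[outputIdx++] = item;
        }
    }
}
```

Calling `UnrollSketch.FilterCmpUnroll(data)` leaves the kept values in `data[0..kept)`, in their original order.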

And here are the benchmark results:

| Method | N | Mean | Error | StdDev | Ratio | Code Size |
|---|---|---|---|---|---|---|
| FilterCmp | 23 | 274.6 ns | 0.40 ns | 0.35 ns | 1.00 | 411 B |
| FilterCmp_Unroll | 23 | 257.5 ns | 0.94 ns | 0.83 ns | 0.94 | 606 B |
| FilterCmp | 1047 | 748.1 ns | 2.91 ns | 2.58 ns | 1.00 | 411 B |
| FilterCmp_Unroll | 1047 | 702.5 ns | 5.23 ns | 4.89 ns | 0.94 | 606 B |
| FilterCmp | 1048599 | 501,545.2 ns | 4,985.42 ns | 4,419.45 ns | 1.00 | 411 B |
| FilterCmp_Unroll | 1048599 | 446,311.1 ns | 3,131.42 ns | 2,929.14 ns | 0.89 | 606 B |
| FilterCmp | 33554455 | 29,637,052.2 ns | 184,796.17 ns | 163,817.00 ns | 1.00 | 411 B |
| FilterCmp_Unroll | 33554455 | 29,275,060.6 ns | 145,756.53 ns | 121,713.31 ns | 0.99 | 606 B |

That is quite a jump: 6% – 11% savings in all but the 32M case (which gained only 1%) is no joke. Let’s look at what is actually going on at the assembly level and see if we can optimize this further.

As expected, the code size is bigger, 264 bytes versus the 55 we previously got. But more importantly, we got the range checks back, and a lot of them:

The JIT isn’t able to reason about our first for loop and see that all our accesses are within bounds, which leads to doing a lot of range checks, and likely slows us down. Even with that, we are still showing significant improvements here.

Let’s see what we can do with this:
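One way to take the range checks out of the picture is to work through a `ref long` and `Unsafe.Add()` instead of array indexing. The following is a minimal sketch under that assumption; the class and method names, and the `ref int outputIdx` parameter on `AddToOutput()`, are hypothetical and not the code that was benchmarked.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Runtime.InteropServices;

// Hypothetical names, for illustration only.
public static class UnsafeUnrollSketch
{
    public static int FilterCmpUnroll(long[] items)
    {
        // A raw reference to the first element; Unsafe.Add() does no bounds checking,
        // so staying inside the array is entirely our responsibility.
        ref long itemsRef = ref MemoryMarshal.GetReference(items.AsSpan());
        int len = items.Length;
        int outputIdx = 0;
        int i = 0;

        for (; i + 4 <= len; i += 4)
        {
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i + 0), ref outputIdx);
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i + 1), ref outputIdx);
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i + 2), ref outputIdx);
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i + 3), ref outputIdx);
        }

        // Tail loop for the leftover elements.
        for (; i < len; i++)
        {
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i), ref outputIdx);
        }

        return outputIdx;
    }

    [MethodImpl(MethodImplOptions.AggressiveInlining)]
    private static void AddToOutput(ref long itemsRef, long item, ref int outputIdx)
    {
        if (item >= 0)
        {
            // Store the kept value at the next output slot.
            Unsafe.Add(ref itemsRef, outputIdx++) = item;
        }
    }
}
```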

With that, we expect to have no range checks and still be able to benefit from the unrolling.

| Method | N | Mean | Error | StdDev | Ratio | RatioSD | Code Size |
|---|---|---|---|---|---|---|---|
| FilterCmp | 23 | 275.4 ns | 2.31 ns | 2.05 ns | 1.00 | 0.00 | 411 B |
| FilterCmp_Unroll | 23 | 253.6 ns | 2.59 ns | 2.42 ns | 0.92 | 0.01 | 563 B |
| FilterCmp | 1047 | 741.6 ns | 5.95 ns | 5.28 ns | 1.00 | 0.00 | 411 B |
| FilterCmp_Unroll | 1047 | 665.5 ns | 2.38 ns | 2.22 ns | 0.90 | 0.01 | 563 B |
| FilterCmp | 1048599 | 497,624.9 ns | 3,904.39 ns | 3,652.17 ns | 1.00 | 0.00 | 411 B |
| FilterCmp_Unroll | 1048599 | 444,489.0 ns | 2,524.45 ns | 2,361.38 ns | 0.89 | 0.01 | 563 B |
| FilterCmp | 33554455 | 29,781,164.3 ns | 361,625.63 ns | 320,571.70 ns | 1.00 | 0.00 | 411 B |
| FilterCmp_Unroll | 33554455 | 29,954,093.9 ns | 588,614.32 ns | 916,401.59 ns | 1.01 | 0.04 | 563 B |

That helped for the small and medium inputs (the 1K case went from a 6% saving to 10%). The 32M items case, however, was slightly slower, which is quite a surprise.

Looking at the assembly, I can see that we still have branches, like so:

And here is why this is the case:

Now, can we do better here? It turns out that we can, by using a branchless version of the operation. Here is another way to write the same thing:
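A minimal sketch of what a branchless variant can look like is below. The names are hypothetical, and the sign-bit trick used to compute the increment is an assumption chosen so that no comparison is needed at all; the benchmarked code may express the increment differently.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Runtime.InteropServices;

// Hypothetical names, for illustration only.
public static class BranchlessSketch
{
    public static int FilterCmpUnrollBranchless(long[] items)
    {
        ref long itemsRef = ref MemoryMarshal.GetReference(items.AsSpan());
        int len = items.Length;
        int outputIdx = 0;
        int i = 0;

        for (; i + 4 <= len; i += 4)
        {
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i + 0), ref outputIdx);
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i + 1), ref outputIdx);
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i + 2), ref outputIdx);
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i + 3), ref outputIdx);
        }

        for (; i < len; i++)
        {
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i), ref outputIdx);
        }

        return outputIdx;
    }

    [MethodImpl(MethodImplOptions.AggressiveInlining)]
    private static void AddToOutput(ref long itemsRef, long item, ref int outputIdx)
    {
        // Always store. A negative value lands in a slot that the next kept value
        // overwrites, and outputIdx never passes the read position, so this stays in bounds.
        Unsafe.Add(ref itemsRef, outputIdx) = item;

        // (~item >> 63) is -1 when item >= 0 and 0 when item < 0;
        // masking with 1 turns that into an increment of 1 or 0.
        outputIdx += (int)((~item >> 63) & 1);
    }
}
```

Note that the slots past the returned count may hold leftover (possibly negative) values, so callers should only read the first `kept` entries.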

What happens here is that we are unconditionally setting the value in the array, but only incrementing the output index if the value is greater than or equal to zero. That saves us branches and will likely result in less code. In fact, let’s see what sort of assembly the JIT will output:

What about the performance? I decided to pit the two versions (normal and branchless) head to head and see what this will give us:

| Method | N | Mean | Error | StdDev | Ratio | Code Size |
|---|---|---|---|---|---|---|
| FilterCmp_Unroll | 23 | 276.3 ns | 4.13 ns | 3.86 ns | 1.00 | 411 B |
| FilterCmp_Unroll_Branchleses | 23 | 263.6 ns | 0.95 ns | 0.84 ns | 0.96 | 547 B |
| FilterCmp_Unroll | 1047 | 743.7 ns | 9.41 ns | 8.80 ns | 1.00 | 411 B |
| FilterCmp_Unroll_Branchleses | 1047 | 733.3 ns | 3.54 ns | 3.31 ns | 0.99 | 547 B |
| FilterCmp_Unroll | 1048599 | 502,631.1 ns | 3,641.47 ns | 3,406.23 ns | 1.00 | 411 B |
| FilterCmp_Unroll_Branchleses | 1048599 | 495,590.9 ns | 335.33 ns | 297.26 ns | 0.99 | 547 B |
| FilterCmp_Unroll | 33554455 | 29,356,331.7 ns | 207,133.86 ns | 172,966.15 ns | 1.00 | 411 B |
| FilterCmp_Unroll_Branchleses | 33554455 | 29,709,835.1 ns | 86,129.58 ns | 71,922.10 ns | 1.01 | 547 B |

Surprisingly enough, it looks like the branchless version is very slightly slower. That is a surprise, since I would expect reducing the branches to be more efficient.

Looking at the assembly of those two, the branchless version is slightly bigger (10 bytes, not that meaningful). I think that the key here is that there is a 0.5% chance of actually hitting the branch, which is pretty low. That means that the branch predictor can likely do a really good job and we aren’t going to see any big benefits from the branchless version.

That said… what would happen if we tested that with 5% negatives? That difference in behavior may cause us to see a different result. I tried that, and the results were quite surprising. In the case of the 1K and 32M items, we see a slight cost for the branchless version (an additional 1% – 4%), while for the 1M entries there is an 18% reduction in latency for the branchless version.

I ran the tests again with a 15% chance of negatives, to see what would happen. In that case, we get:
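The post doesn’t show how the inputs are generated, so the following is only a minimal sketch of one way to build test data with a configurable share of negatives. The class name, the `Generate()` signature and the fixed seed are all assumptions made for illustration.

```csharp
using System;

// Hypothetical helper, for illustration only.
public static class NegativesDataSketch
{
    // Fills an array with positive values and flips each one negative
    // with probability `negativeRatio` (for example 0.005, 0.05 or 0.15).
    public static long[] Generate(int count, double negativeRatio, int seed = 42)
    {
        var rng = new Random(seed); // a fixed seed keeps runs comparable
        var items = new long[count];

        for (int i = 0; i < count; i++)
        {
            long value = rng.Next(1, int.MaxValue); // strictly positive
            items[i] = rng.NextDouble() < negativeRatio ? -value : value;
        }

        return items;
    }
}
```

With randomly placed negatives like this, the branch becomes harder to predict as the ratio grows, which is the effect the experiment is probing.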

| Method | N | Mean | Error | StdDev | Ratio | RatioSD | Code Size |
|---|---|---|---|---|---|---|---|
| FilterCmp_Unroll | 23 | 273.5 ns | 3.66 ns | 3.42 ns | 1.00 | 0.00 | 537 B |
| FilterCmp_Unroll_Branchleses | 23 | 280.2 ns | 4.85 ns | 4.30 ns | 1.03 | 0.02 | 547 B |
| FilterCmp_Unroll | 1047 | 1,675.7 ns | 29.55 ns | 27.64 ns | 1.00 | 0.00 | 537 B |
| FilterCmp_Unroll_Branchleses | 1047 | 1,676.3 ns | 16.97 ns | 14.17 ns | 1.00 | 0.02 | 547 B |
| FilterCmp_Unroll | 1048599 | 2,206,354.4 ns | 6,141.19 ns | 5,444.01 ns | 1.00 | 0.00 | 537 B |
| FilterCmp_Unroll_Branchleses | 1048599 | 1,688,677.3 ns | 11,584.00 ns | 10,835.68 ns | 0.77 | 0.01 | 547 B |
| FilterCmp_Unroll | 33554455 | 205,320,736.1 ns | 2,757,108.01 ns | 2,152,568.58 ns | 1.00 | 0.00 | 537 B |
| FilterCmp_Unroll_Branchleses | 33554455 | 199,520,169.4 ns | 2,097,285.87 ns | 1,637,422.86 ns | 0.97 | 0.01 | 547 B |

As you can see, under 15% negatives we have basically the same cost for the small inputs, a big improvement (23%) in the 1M scenario and not much improvement (3%) in the 32M scenario.

All in all, that is very interesting information. Digging into the exact why and how of that means pulling out a CPU instruction profiler and starting to look at where we have stalls, which is a bit further than I want to invest in this scenario.

What if we try to rearrange the code a little bit? The code looks like this (load the value and AddToOutput() immediately):

AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i + 0));

What if we split it a little bit, so the code will look like this:
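A minimal sketch of that split, assuming the same hypothetical `AddToOutput(ref itemsRef, item, ref outputIdx)` helper shape as a stand-in for the real one: all four loads are issued first, and only then do we run the compare-and-store work. Whether the JIT and the CPU actually keep that ordering is not guaranteed.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Runtime.InteropServices;

// Hypothetical names, for illustration only.
public static class SplitLoadSketch
{
    public static int FilterCmpUnroll(long[] items)
    {
        ref long itemsRef = ref MemoryMarshal.GetReference(items.AsSpan());
        int len = items.Length;
        int outputIdx = 0;
        int i = 0;

        for (; i + 4 <= len; i += 4)
        {
            // Issue all four loads up front...
            long a = Unsafe.Add(ref itemsRef, i + 0);
            long b = Unsafe.Add(ref itemsRef, i + 1);
            long c = Unsafe.Add(ref itemsRef, i + 2);
            long d = Unsafe.Add(ref itemsRef, i + 3);

            // ...and only then do the filtering work on the loaded values.
            AddToOutput(ref itemsRef, a, ref outputIdx);
            AddToOutput(ref itemsRef, b, ref outputIdx);
            AddToOutput(ref itemsRef, c, ref outputIdx);
            AddToOutput(ref itemsRef, d, ref outputIdx);
        }

        for (; i < len; i++)
        {
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i), ref outputIdx);
        }

        return outputIdx;
    }

    [MethodImpl(MethodImplOptions.AggressiveInlining)]
    private static void AddToOutput(ref long itemsRef, long item, ref int outputIdx)
    {
        if (item >= 0)
        {
            Unsafe.Add(ref itemsRef, outputIdx++) = item;
        }
    }
}
```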

The idea here is that we are trying to get the JIT / CPU to fetch the items before they are actually needed, so there would be more time for the memory to arrive.

Remember that for the 1M scenario, we are dealing with 8MB of memory and for the 32M scenario, we have 256MB. Looking at the loop prolog, we can see that it is indeed first fetching all the items from memory, then doing the work:

In terms of performance, that gives us a small win (1% – 2% range) for the 1M and 32M entries scenario.

The last thing that I wanted to test is unrolling the loop even further: what would happen if we did 8 items per loop iteration, instead of 4?

There is some improvement (4% in the 1K scenario, 1% in the 32M scenario), but also a slowdown (2% in the 1M scenario).
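For reference, a minimal sketch of an eight-way version with the loads issued up front. As before, the names and the helper signature are hypothetical and this is not the benchmarked code.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Runtime.InteropServices;

// Hypothetical names, for illustration only.
public static class Unroll8Sketch
{
    public static int FilterCmpUnroll8(long[] items)
    {
        ref long itemsRef = ref MemoryMarshal.GetReference(items.AsSpan());
        int len = items.Length;
        int outputIdx = 0;
        int i = 0;

        for (; i + 8 <= len; i += 8)
        {
            // Eight loads first, so the memory requests are all in flight early.
            long a = Unsafe.Add(ref itemsRef, i + 0);
            long b = Unsafe.Add(ref itemsRef, i + 1);
            long c = Unsafe.Add(ref itemsRef, i + 2);
            long d = Unsafe.Add(ref itemsRef, i + 3);
            long e = Unsafe.Add(ref itemsRef, i + 4);
            long f = Unsafe.Add(ref itemsRef, i + 5);
            long g = Unsafe.Add(ref itemsRef, i + 6);
            long h = Unsafe.Add(ref itemsRef, i + 7);

            AddToOutput(ref itemsRef, a, ref outputIdx);
            AddToOutput(ref itemsRef, b, ref outputIdx);
            AddToOutput(ref itemsRef, c, ref outputIdx);
            AddToOutput(ref itemsRef, d, ref outputIdx);
            AddToOutput(ref itemsRef, e, ref outputIdx);
            AddToOutput(ref itemsRef, f, ref outputIdx);
            AddToOutput(ref itemsRef, g, ref outputIdx);
            AddToOutput(ref itemsRef, h, ref outputIdx);
        }

        // Tail loop: up to seven leftover elements.
        for (; i < len; i++)
        {
            AddToOutput(ref itemsRef, Unsafe.Add(ref itemsRef, i), ref outputIdx);
        }

        return outputIdx;
    }

    [MethodImpl(MethodImplOptions.AggressiveInlining)]
    private static void AddToOutput(ref long itemsRef, long item, ref int outputIdx)
    {
        if (item >= 0)
        {
            Unsafe.Add(ref itemsRef, outputIdx++) = item;
        }
    }
}
```

The trade-off is a longer tail loop and more live values per iteration, which may be part of why the gains are uneven across input sizes.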

I think that this is probably roughly the end of the line as far as we can get for scalar code.

We already made quite a few strides in trying to parallelize the work the CPU is doing by just laying out the code as we would like it to be. We tried to control the manner in which it touches memory and in general, those are pretty advanced techniques.

To close this post, I would like to take a look at the gains we got. I’m comparing the first version of the code, the last version we had in the previous post, and the unrolled version (both branchy and branchless) with 8 operations at once and memory prefetching.

| Method | N | Mean | Error | StdDev | Ratio | RatioSD | Code Size |
|---|---|---|---|---|---|---|---|
| FilterCmp | 23 | 277.3 ns | 0.69 ns | 0.64 ns | 1.00 | 0.00 | 411 B |
| FilterCmp_NoRangeCheck | 23 | 270.7 ns | 0.42 ns | 0.38 ns | 0.98 | 0.00 | 397 B |
| FilterCmp_Unroll_8 | 23 | 257.6 ns | 1.45 ns | 1.21 ns | 0.93 | 0.00 | 672 B |
| FilterCmp_Unroll_8_Branchless | 23 | 259.9 ns | 1.96 ns | 1.84 ns | 0.94 | 0.01 | 682 B |
| FilterCmp | 1047 | 754.3 ns | 1.38 ns | 1.22 ns | 1.00 | 0.00 | 411 B |
| FilterCmp_NoRangeCheck | 1047 | 749.0 ns | 1.81 ns | 1.69 ns | 0.99 | 0.00 | 397 B |
| FilterCmp_Unroll_8 | 1047 | 647.2 ns | 2.23 ns | 2.09 ns | 0.86 | 0.00 | 672 B |
| FilterCmp_Unroll_8_Branchless | 1047 | 721.2 ns | 1.23 ns | 1.09 ns | 0.96 | 0.00 | 682 B |
| FilterCmp | 1048599 | 499,675.6 ns | 2,639.97 ns | 2,469.43 ns | 1.00 | 0.00 | 411 B |
| FilterCmp_NoRangeCheck | 1048599 | 494,388.4 ns | 600.46 ns | 532.29 ns | 0.99 | 0.01 | 397 B |
| FilterCmp_Unroll_8 | 1048599 | 426,940.7 ns | 1,858.57 ns | 1,551.99 ns | 0.85 | 0.01 | 672 B |
| FilterCmp_Unroll_8_Branchless | 1048599 | 483,940.8 ns | 517.14 ns | 458.43 ns | 0.97 | 0.00 | 682 B |
| FilterCmp | 33554455 | 30,282,334.8 ns | 599,306.15 ns | 531,269.30 ns | 1.00 | 0.00 | 411 B |
| FilterCmp_NoRangeCheck | 33554455 | 29,410,612.5 ns | 29,583.56 ns | 24,703.61 ns | 0.97 | 0.02 | 397 B |
| FilterCmp_Unroll_8 | 33554455 | 29,102,708.3 ns | 42,824.78 ns | 40,058.32 ns | 0.96 | 0.02 | 672 B |
| FilterCmp_Unroll_8_Branchless | 33554455 | 29,761,841.1 ns | 48,108.03 ns | 42,646.51 ns | 0.98 | 0.02 | 682 B |

The unrolled 8 version is the winner by far in this scenario (0.5% negatives), coming in 14% – 15% faster than the baseline for the 1K and 1M inputs. Since that is the scenario we have in the real code, that is what I’m focusing on.

Is there anything left to do here?

My next step is to explore whether using vector instructions will be a good option for us.