Recently I have found myself writing more and more infrastructure-level code. There are several reasons for that, but the main one is that the architectural approaches I advocate don’t have good enough infrastructure in the environment that I usually work with.

Writing infrastructure is both fun and annoying. It is fun because you usually don’t have business rules to deal with; it is annoying because it takes time to get it to do something that gives the business real value.

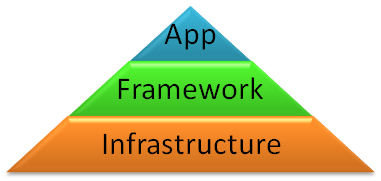

That said, there are significant differences between writing application-level code and infrastructure-level code. I usually think about the layers like this:

Infrastructure code is usually the base. It provides basic services such as communication, storage and thread management. It should also provide strong guarantees about what it is doing, and it should be simple, understandable and provide the hooks you need to figure out what happened when things go wrong.

Framework code sits on top of the infrastructure and provides easy-to-use semantics over it. Frameworks usually take away some of the options that the infrastructure gives you in order to present a more focused solution for a particular scenario.

App code is even more specific than that, making use of the underlying framework to deal with much of the complexity that we would otherwise have to deal with ourselves.
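To make the layering concrete, here is a minimal sketch in Python. Everything in it is hypothetical and invented for illustration (`InMemoryTransport`, `MessageBus`, the `OrderPlaced` message); it is not taken from any real library. The transport moves raw bytes and exposes an error hook, the bus narrows that down to JSON messages with one handler per type, and the application code at the bottom only deals with orders.

```python
import json
from typing import Callable, Dict, List


class InMemoryTransport:
    """Infrastructure (hypothetical): moves raw bytes and knows nothing about
    their meaning. The on_error hook lets callers see what went wrong."""

    def __init__(self, on_error: Callable[[bytes, Exception], None]) -> None:
        self._receivers: List[Callable[[bytes], None]] = []
        self._on_error = on_error

    def subscribe(self, receiver: Callable[[bytes], None]) -> None:
        self._receivers.append(receiver)

    def send(self, payload: bytes) -> None:
        for receiver in self._receivers:
            try:
                receiver(payload)
            except Exception as exc:
                # Report the failure instead of swallowing it silently.
                self._on_error(payload, exc)


class MessageBus:
    """Framework (hypothetical): takes options away (JSON only, one handler
    per message type) to offer a more focused API."""

    def __init__(self, transport: InMemoryTransport) -> None:
        self._transport = transport
        self._handlers: Dict[str, Callable[[dict], None]] = {}
        transport.subscribe(self._dispatch)

    def handle(self, message_type: str, handler: Callable[[dict], None]) -> None:
        self._handlers[message_type] = handler

    def publish(self, message_type: str, body: dict) -> None:
        envelope = {"type": message_type, "body": body}
        self._transport.send(json.dumps(envelope).encode("utf-8"))

    def _dispatch(self, payload: bytes) -> None:
        envelope = json.loads(payload.decode("utf-8"))
        # A missing handler raises KeyError, which the transport reports.
        self._handlers[envelope["type"]](envelope["body"])


# Application code: a single purpose, expressed in the framework's terms.
errors: List[tuple] = []
shipped: List[int] = []

transport = InMemoryTransport(on_error=lambda payload, exc: errors.append((payload, exc)))
bus = MessageBus(transport)
bus.handle("OrderPlaced", lambda body: shipped.append(body["order_id"]))

bus.publish("OrderPlaced", {"order_id": 42})
bus.publish("OrderCancelled", {"order_id": 7})  # no handler registered
```

Note how the application code never touches bytes or error plumbing, while the transport never learns what an order is. The unhandled `OrderCancelled` message surfaces through the infrastructure's error hook rather than disappearing.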

Writing application code is easy; it is a single-purpose piece of code. Writing framework and infrastructure code is harder, because it has to apply to many more scenarios.

So far, I don’t believe that I have said anything new.

What is important to understand is that practices that work for application-level code do not necessarily work for infrastructure code. A good example would be this nasty bit of work. It doesn’t read very well, it has some really long methods, and… it handles a lot of important infrastructure concerns that you have to deal with. For example, it is completely async, it has good error handling and reporting, and it has absolutely no knowledge of what exactly it is doing. That is left to the higher-level pieces of the code. Trying to apply application-level practices to it will not really work: different constraints and different requirements.

By the same token, testing such code follows a different pattern than testing application-level code. Tests are often more complex, requiring more behavior in the test to reproduce real-world scenarios. The tests can rarely be isolated bits; they usually have to include significant pieces of the infrastructure. And what they test can be complex as well.
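As a rough illustration of what such a test can look like, here is a small Python sketch. The `Worker` class is a hypothetical piece of infrastructure made up for this example: it runs jobs on a background thread and reports failures through a hook. The test does not mock the thread or the queue; it drives the real thing and reproduces a "one job blows up, the next one must still run" scenario.

```python
import queue
import threading
from typing import Callable, List, Optional


class Worker:
    """Hypothetical infrastructure: runs submitted jobs on a background thread."""

    def __init__(self, on_error: Callable[[Exception], None]) -> None:
        self._jobs: "queue.Queue[Optional[Callable[[], None]]]" = queue.Queue()
        self._on_error = on_error
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def submit(self, job: Callable[[], None]) -> None:
        self._jobs.put(job)

    def stop(self) -> None:
        # None is a sentinel: jobs queued before it are processed first.
        self._jobs.put(None)
        self._thread.join(timeout=5)

    def _run(self) -> None:
        while True:
            job = self._jobs.get()
            if job is None:
                return
            try:
                job()
            except Exception as exc:
                # A failing job must not kill the worker thread.
                self._on_error(exc)


def run_failing_job_scenario():
    """The test body: real thread, real queue, and a simulated failure."""
    seen_errors: List[Exception] = []
    seen_results: List[str] = []
    worker = Worker(on_error=seen_errors.append)

    def failing_job() -> None:
        raise RuntimeError("disk full")

    worker.submit(failing_job)
    worker.submit(lambda: seen_results.append("ok"))
    worker.stop()
    return seen_errors, seen_results


seen_errors, seen_results = run_failing_job_scenario()
assert seen_results == ["ok"]
assert [str(e) for e in seen_errors] == ["disk full"]
```

Even for something this small, the test has to set up a failure, keep the real threading in play, and assert on both the error path and the recovery path at once.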

Different constraints and different requirements.