Optimizing read transaction startup timeThe low hanging fruit

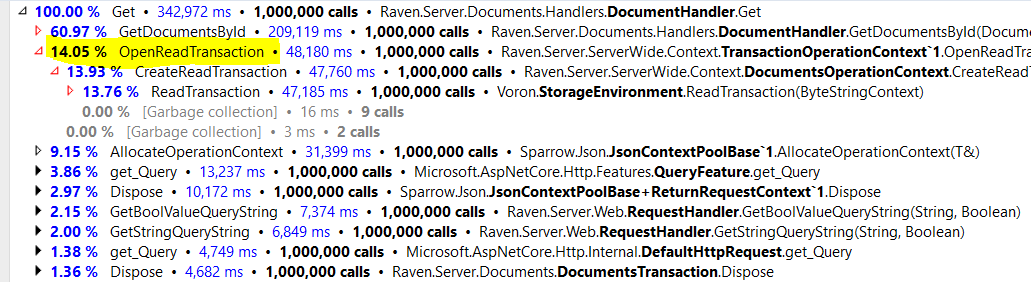

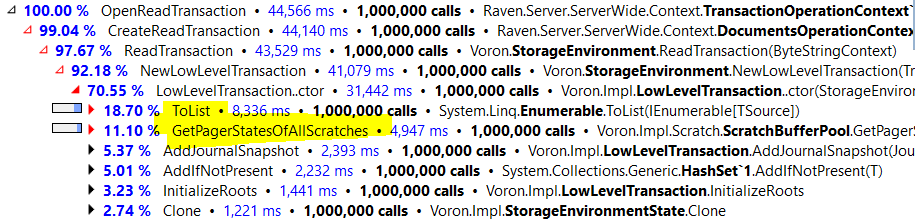

The benchmark in question deals with service 1 million random documents, as fast as possible. In my previous post, I detailed how we were able to reduce the cost of finding the right database to about 1.25% of the cost of the request (down from about 7% – 8%). In this post, we are going to tackle the next problem:

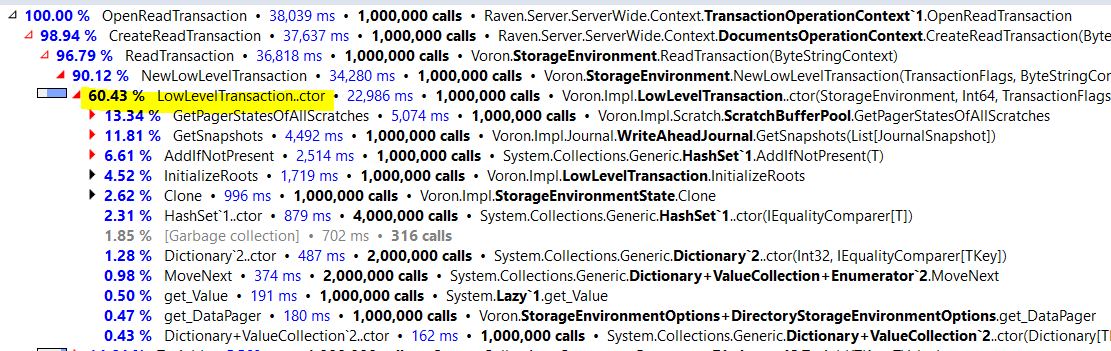

So 14% of the request times goes into just opening a transaction?! Well, that make sense, surely that means that there is a lot going on there, some I/O, probably. The fact that we can do that in less than 50 micro seconds is pretty awesome, right?

Not quite, let us look at what is really costing us here.

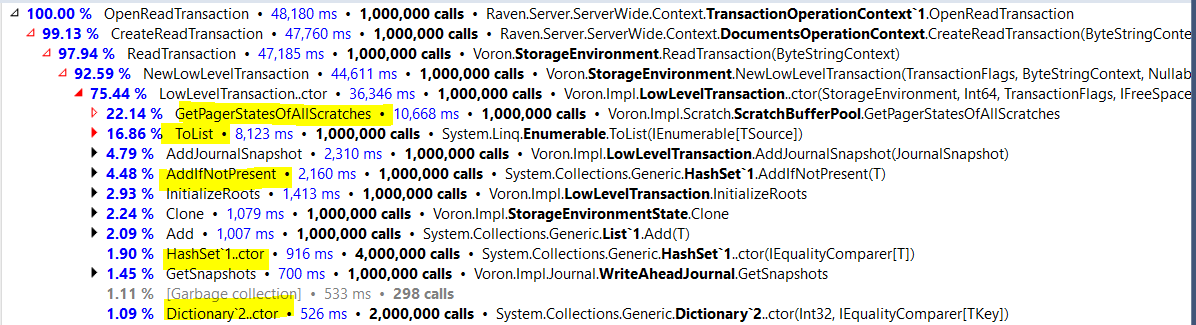

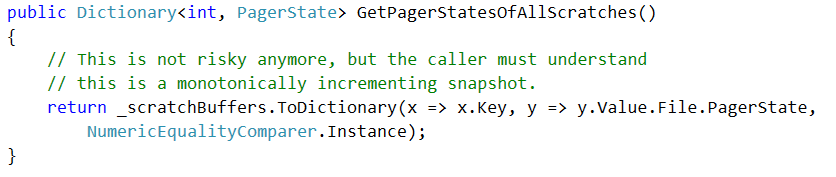

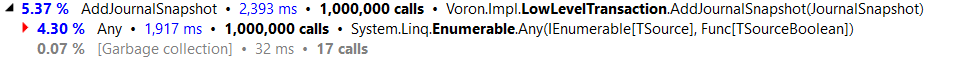

Just look at all of those costs, all of this isn’t stuff that you just have to deal with because there is I/O involved, let us look at the GetPagerStatesOfAllScratches, shall we?

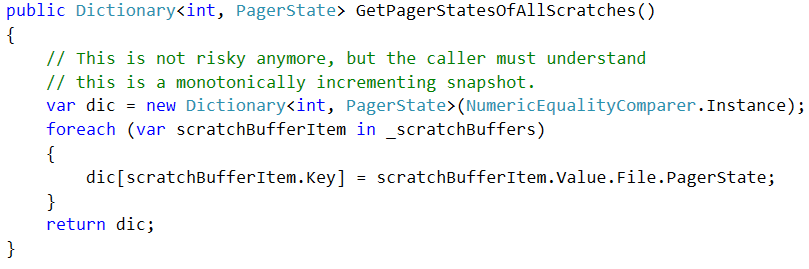

I’ll just sit down and cry now, if you don’t mind. Here is what happens when you take this code and remove the Linqness.

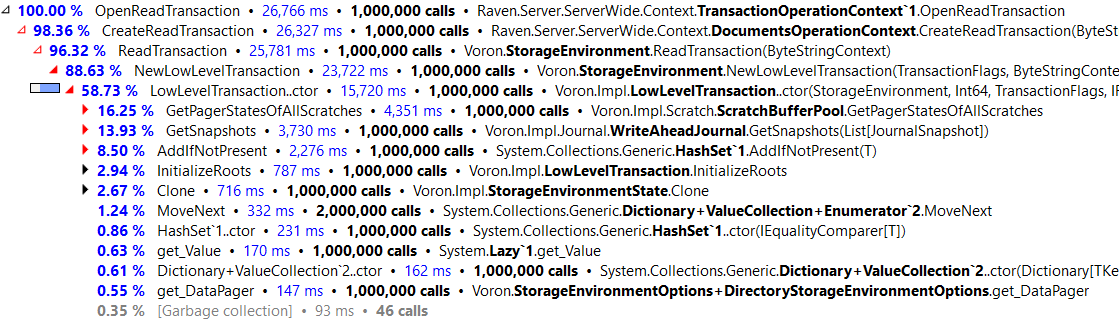

This is purely a mechanical transformation, we have done nothing to really change things. And here is the result:

Just this change reduced the cost of this method call by over 100%! As this is now no longer our top rated functions, we’ll look at the ToList now.

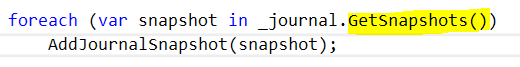

This method is called from here:

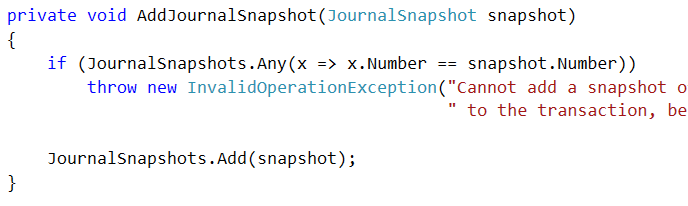

And here is the implementation:

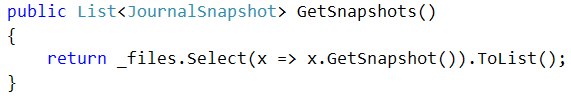

And then we have:

Well, at least my job is going to be very easy here. The interesting thing is that the Any() call can be removed / moved to DEBUG only. I changed the code to pass the JournalSnapshots into the GetSnapshots, saving us an allocation and all those Any calls. That gave us:

So far, we have managed to reduce ten seconds out of the cost of opening a transaction. We have done this not by being smart of doing anything complex. We just looked at the code and fixed the obvious performance problems in it.

Let’s see how far that can take us, shall we?

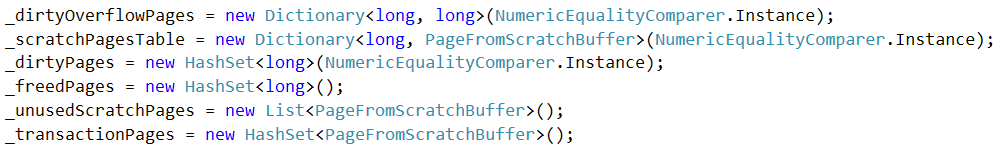

The next observation I had was that Transaction is actually used for both read and write operations. And that there is quite a bit of state in the Transaction that is only used for writes. However, this is actually a benchmark measuring pure read speed, so why should we be paying all of those costs needlessly? I basically moved all the field initializers to the constructor, and only initialize them if we are using a write transaction. Just to give you some idea, here is what I moved:

So those are six non trivial allocations that have been moved from the hot path, and a bunch of memory we won’t need to collect. As for the impact?

We are down to about half of our initial cost! And we haven’t really done anything serious yet. This is quite an awesome achievement, but we are not done. In my next post, we’ll actually move to utilizing our knowledge of the code to make more major changes in order to increase overall performance.

More posts in "Optimizing read transaction startup time" series:

- (31 Oct 2016) Racy data structures

- (28 Oct 2016) Every little bit helps, a LOT

- (26 Oct 2016) The performance triage

- (25 Oct 2016) Unicode ate my perf and all I got was

- (24 Oct 2016) Don’t ignore the context

- (21 Oct 2016) Getting frisky

- (20 Oct 2016) The low hanging fruit

Comments

In GetPagerStatesOfAllScratches(), isn't another low hanging fruit to construct the dictionary with capacity of the count in _ScratchBuffers? Ditto with GetSnapshots() where you can unlinqify to initialize list capacity, or even better is to return an array if you don't need to grow the result like for read transactions. Finally, you're right about the Any() call of AddJournalSnapshot() but I personally think it should be wrapped in a System.Diagnostics.Contracts.Contract.Assume() rather than removed.

Philippecp, This is just the start of a pretty long series of post. Note that setting initial capacity helps, but not by that much, compared to what we ended up doing. And we didn't remove the Any call completely, we implemented that efficiently and put that behind an #if DEBUG

I still think contracts is the right tool for this since it's more explicit and is also removed from release builds by default. Otherwise, extracting that code in a method marked with the ConditionalAttribute does the same thing but is, imho, cleaner than #if Debug. I know this is kinda off topic though and I'm looking forward to next post!

Out of curiosity, are the changes in this series targeting ravendb 3.5 or 4.0? Also, any ETA on 4.0 preview builds and/or making 4.0 publicly visible on github?

Philippecp, This series is about 4.0.

v4.0 is publicly visible on github right now, see: https://github.com/ravendb/ravendb/tree/v4.0

With each Raven 4.0 optimization post, I'm getting happier and happier. 4.0 is going to rock!

Interesting (and kind of sad?) to see the impact of simply removing some LINQ-iness.

Microsoft's Joe Duffy has written/spoken about future C# compiler optimizations that will reduce allocations and turn LINQ-y code into procedural loops with fewer allocations where possible. From the looks of this post, such optimizations could help hot paths significantly.

Do you know why the ToDictionary call was so slow before you inlined it? The two lambdas don't access their closure, so I would expect the C# compiler to preallocate them as static fields.

Benjamin, I assume that it is because it needs 2 delegate invocations per value + the dictionary overhead.

Comment preview