Optimizing read transaction startup time: Unicode ate my perf and all I got was

As an aside, I wonder how much stuff will break because the title of this post has in it.

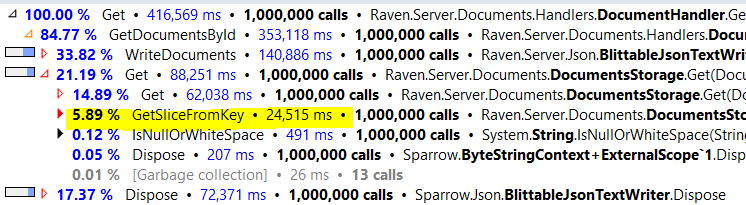

The topic of this post is the following profiler output. GetSliceFromKey takes about 6% of our performance, or about 24.5 seconds out of the total run. That kinda sucks.

What is the purpose of this function? Well, RavenDB’s document ids are case insensitive, so we need to convert the key to lower case and then do a search on our internal index. That has quite a big cost associated with it.

And yes, we are aware of the pain. Luckily, we are already working with a highly optimized codebase, so we aren’t seeing this function dominate our costs, but still…

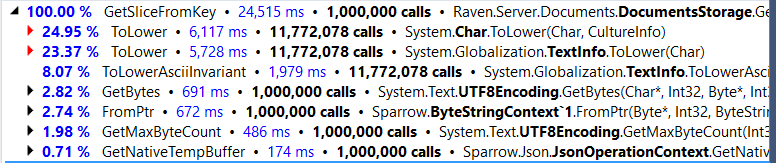

Here is the breakdown of this code:

As you can see, over 60% of this function is spent in just converting to lower case, which sucks. Now, we have some additional knowledge about this function. For the vast majority of cases, we know that this function will handle only ASCII characters, and that Unicode document ids are possible, but relatively rare. We can utilize this knowledge to optimize this method. Here is what this will look like, in code:

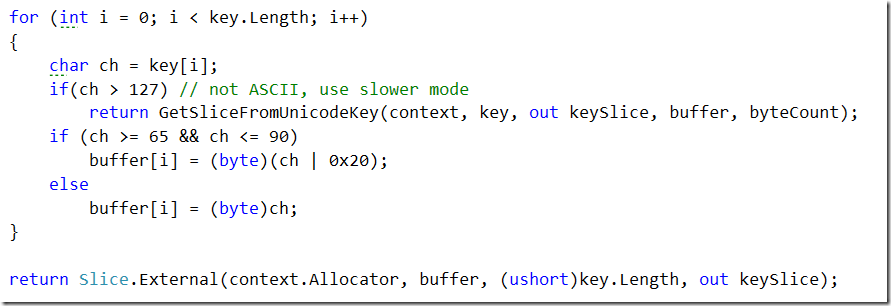

Basically, we scan through the string, and if there is a character whose value is over 127 we fall back to the slower method (slower being relative, that is still a string conversion in less than 25 μs).

Otherwise, for each character we check whether it is in the upper case range. If it is, we convert it to lower case and store that in the buffer; if not, we just store the original value. (ASCII bit analysis is funny, it was intentionally designed to be used with bit masking, and all sorts of tricks are possible there.)
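To make the fast path concrete, here is a minimal sketch in C. The real code is C# and only appears in the screenshot, so treat this as an illustration of the idea and not RavenDB's implementation. The name `try_lower_ascii`, the UTF-16 input passed as `uint16_t` code units, and the caller-supplied output buffer are all assumptions made for the example.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper (not RavenDB's API): lower-cases a key made of UTF-16
 * code units into a byte buffer, as long as every code unit is ASCII.
 * Returns false at the first code unit above 127, so the caller can fall
 * back to a full, Unicode-aware lower-casing routine.
 * `out` must have room for at least `len` bytes. */
bool try_lower_ascii(const uint16_t *key, size_t len, uint8_t *out)
{
    for (size_t i = 0; i < len; i++) {
        uint16_t ch = key[i];

        if (ch > 127)
            return false;       /* non-ASCII: take the slow path instead */

        if (ch >= 'A' && ch <= 'Z')
            ch |= 0x20;         /* 'A' is 0x41, 'a' is 0x61: one bit apart */

        out[i] = (uint8_t)ch;   /* safe: ch is known to fit in 7 bits */
    }
    return true;
}
```

When this returns false, the caller would hand the whole key to the existing Unicode-aware conversion, which is the old behavior. The range check before the `|= 0x20` matters: setting that bit blindly would also mangle characters such as `@` and `[`, which sit just outside the `A`-`Z` range.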

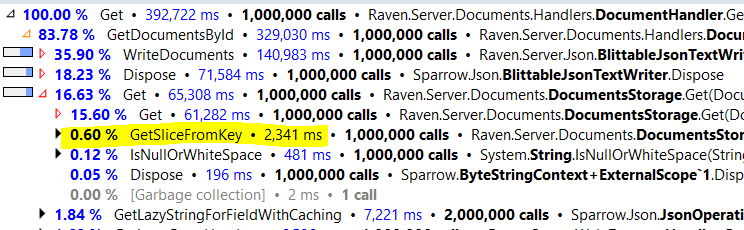

The result?

This method went from taking 5.89% to taking just 0.6%, and we saved over 22 seconds(!) in the run. Again, this is under the profiler, and the code is heavily multithreaded. In practice, this means that the run took 3 seconds less.

Either way, we are still doing pretty good, and we don’t have that pile of poo, so I think we’re good.

More posts in "Optimizing read transaction startup time" series:

- (31 Oct 2016) Racy data structures

- (28 Oct 2016) Every little bit helps, a LOT

- (26 Oct 2016) The performance triage

- (25 Oct 2016) Unicode ate my perf and all I got was

- (24 Oct 2016) Don’t ignore the context

- (21 Oct 2016) Getting frisky

- (20 Oct 2016) The low hanging fruit

Comments

... you can have pile of poo when processing bytes... http://www.fileformat.info/info/unicode/char/1f4a9/index.htm ;)

What's the performance impact when the string is primarily non-ASCII characters? Because I imagine that, while the percentage of keys in all RavenDB databases that are non-ASCII is relatively small, some people will have tons.

Maybe the team has already thought about this, but why not just store document ids in lower case? If you want to preserve the user's casing of document ids, you can use another field; maybe that would take too much time to swap out when returning the doc, or possibly too much space. Just curious about the design decision.

I can only see two ordinary spaces (U+0020) between "has" and "in". And I don't see anything special in the title either.

Is it because whatever symbol was there broke the blog, or am I missing something?

dhasenan, It basically reverts back to the old version. That has a higher cost, but not that much. Note that this is for benchmarking work, and if you aren't running tens of thousands of requests a second, it is meaningless for you.

Paul, The document id is stored in lower case, and we keep the original case, just in case :-) The problem is that we get an id in unknown case, and need to lower it so we can search

Svick, Yes, there was supposed to be the Pile of Poo unicode there.

Would it provide a further, but small, improvement to send the value of i to GetSliceFromKey() so that it would know there is no Unicode before the ith character?

David, Yes, it would, but I think that the value of it would be too small to matter in most cases
