Optimizing read transaction startup timeGetting frisky

As in the last post, I’m focusing on reducing the startup time for transactions. In the last post, we focused on structural changes (removing Linq usage, avoiding O(N^2) operations) and we were able to reduce our cost by close to 50%.

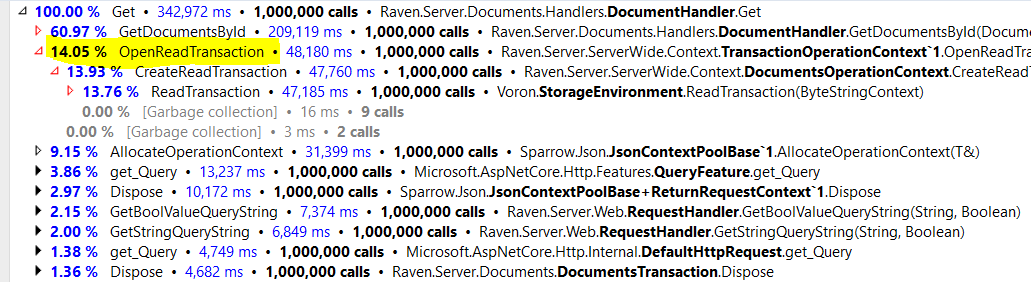

As a reminder, this is what we started with:

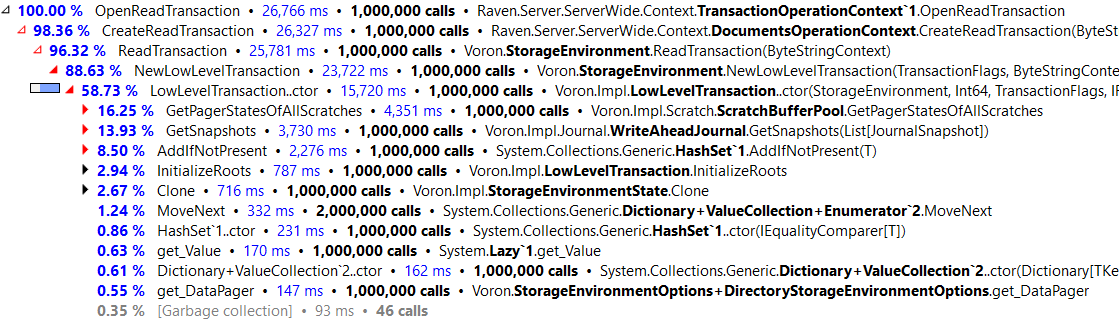

And this is where we stopped on the last post:

Now, I can see that we spend quite a bit of time in the AddifNotPresent method of the HashSet. Since we previously removed any calls to write only transactional state, this means that we have something in the transaction that uses a HashSet and in this scenario, adds just one item to it. Inspecting the code showed us that this was the PagerStates variables.

Transactions need to hold the PagerState so they can ensure that the pagers know when the transaction starts and ends. And we do that by calling AddRef / Release on that at the appropriate times. The nice thing about this is that we don’t actually care if we hold the same PagerState multiple times. As long as we called the same number of AddRef / Release, we are good. Therefor, we can just drop the HashSet in favor of a regular list, which gives us:

So that is about a second and a half we just saved in this benchmark.But note that we still spend quite a bit of time on the List.Add method, looking deeper into this, we can see that all of this time is spent here:

So the first Add() requires an allocation, which is expensive.

I decided to benchmark two different approaches to solving this. The first is to just define an initial capacity of 2, which should be enough to cover most common scenarios. This resulted in the following:

So specifying the capacity upfront had a pretty major impact on our performance, dropping it by another full second. The next thing I decided to try was to see if a linked list would be even better. This is typically very small, and the only iteration we do on it is during disposal, anyway (and it is very common to have just one or two of those).

That said, I’m not sure that we can beat the List performance when we have specified the size upfront. A LinkedList.Add() requires allocation, after all, and a List.Add just sets a value.

So… nope, we won’t be using this optimization.

Now, let us focus back on the real heavy weights in this scenario. The GetPageStatesOfallScratches and GetSnapshots. Together they take about 36% of the total cost of this scenario, and that is just stupidly expensive. Here we can utilize our knowledge of the code and realize that those values can only ever be changed by a write transaction, and they are never changed . That gives us an excellent opportunity to do some caching.

Here is what this looks like when we move the responsibility of creating the pager states of all scratches to the write transaction:

Now let us do the same for GetSnapShots()… which give us this:

As a reminder, LowLevelTransaction.ctor started out with 36.3 seconds in this benchmark, now we are talking about 6.6. So we reduced the performance cost by over 82%.

And the cost of a single such call is down to 7 microsecond under the profiler.

That said, the cost of OpenReadTransaction started out at 48.1 seconds, and we dropped it to 17.6 seconds. So we had a 63% reduction in cost, but it looks like we now have more interesting things to look at than the LowLevelTransaction constructor…

The first thing to notice is that EnsurePagerStateReference ends up calling _pagerStates.Add(), and it suffers from the same issue of cost because of it needs to increase the capacity.

Increasing the initial capacity has resulted in measurable gain.

With that, we can move on to analyze the rest of the costs. We can see that the TryAdd on the ConcurrentDictionary is really expensive*.

* For a given value of really ![]() It takes just under 3 microseconds to complete, but that is still a big chunk of what we can do here.

It takes just under 3 microseconds to complete, but that is still a big chunk of what we can do here.

The reason we need this call is that we need to track the active transactions. This is done because we need to know who is the oldest running transaction for MVCC purposes. The easiest thing to do there was to throw that in a concurrency dictionary, but that is expensive for those kind of workloads. I have switch it up with a dedicated class, that allows us to do better optimizations around it.

The design we ended up going with is a bit complex (more after the profiler output), but it gave us this:

So we are just over a third of the cost of the concurrent dictionary. And we did that using a dedicated array per thread, so we don’t have contention. The problem is that we can’t just do that, we need to read all of those values, and we might be closing a transaction from a different thread. Because of that, we split the logic up. We have an array per each thread that contains a wrapper class, and we give the transaction access to that wrapper class instance. So when it is disposed, it will clear the value in the wrapper class.

Then we can reuse that instance later in the original thread once the memory write has reached the original thread. And until then, we’ll just have a stale read on that value and ignore it. It is more complex, and took a bit of time to get right, but the performance justify it.

Current status is that we started at 48.1 seconds for this benchmark, and now we are at 14.7 seconds for the OpenReadTransaction. That is a good day’s work.

More posts in "Optimizing read transaction startup time" series:

- (31 Oct 2016) Racy data structures

- (28 Oct 2016) Every little bit helps, a LOT

- (26 Oct 2016) The performance triage

- (25 Oct 2016) Unicode ate my perf and all I got was

- (24 Oct 2016) Don’t ignore the context

- (21 Oct 2016) Getting frisky

- (20 Oct 2016) The low hanging fruit

Comments

Comment preview