Optimizing read transaction startup time: Racy data structures

Finding the appropriate image for this post was hard; try searching for “racy pictures” in Google Image Search, but you might not want to do it from work.


Anyway, today at lunch we had a discussion about abstractions and the level you should be working at. The talk centered on the difference between working in low-level C and working with a high-level framework like C#, and the relative productivity of each.

At one point the following argument was raised: “Well, consider the fact that you never need to implement List, for example”. To which my reaction was: “I did just that last week”.

Now, to forestall the nitpickers: pretty much any C developer will already have a library of common data structures in place, I know. And no, you shouldn’t be implementing basic data structures unless you have a really good reason.

In my case, I think I did. The issue is very simple. I need a collection of items that is safe for multithreaded reads, but that is mostly accessed from a single thread and is only ever modified by a single thread. Oh, and it is also extremely performance sensitive.

The reason we started looking into replacing them is that the concurrent data structures that we were using (ConcurrentDictionary & ConcurrentStack, in those cases) were too expensive. And a large part of that was because they gave us a lot more than what we actually needed (fully concurrent access).

So, how do we build a simple list that allows for the following:

- Only one thread can write.

- Multiple threads can read.

- No synchronization on either end.

- Stale reads allowed.

The key part here is the fact that we allow stale reads.

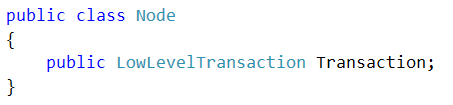

Here is the concrete scenario: we need to track all active transactions. A transaction is single threaded, but we allow thread hopping (because of async). So we define:

And then we have:

DynamicArray is just a holder for an array of Nodes. Whenever we need to add an item to the active transactions, we’ll get the local thread value, and do a linear search through the array. If we find a node that has a null Transaction value, we’ll use it. Otherwise, we’ll add a new Node value to the end of the array. If we run out of room in the array, we’ll double the array size. All pretty standard stuff, so far. Removing a value from the array is also simple, all you need to do is to null the Transaction field on the relevant node.
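The add and remove steps above can be sketched in Java. The post's own code is .NET and isn't shown here, so this is a minimal, hypothetical version. `Node`, `DynamicArray`, the initial capacity of 4, and modelling a transaction as a plain `Object` are assumptions for illustration. Fields that other threads read are `volatile`, since in Java that is what guarantees those threads observe the writes.

```java
import java.util.Arrays;

// Hypothetical Java sketch of the single-writer DynamicArray from the post.
// Assumptions: a transaction is modelled as a plain Object, and the initial
// capacity of 4 is arbitrary; the original .NET code is not reproduced here.
final class Node {
    // volatile: guarantees other threads observe set/clear writes to this field
    volatile Object transaction;
}

final class DynamicArray {
    // Only the owning thread replaces this reference; readers take it as a snapshot.
    private volatile Node[] nodes = new Node[4];
    // Number of slots filled so far; touched only by the owning thread.
    private int count;

    // Owning thread only: reuse a node whose transaction is null, else append one.
    Node add(Object tx) {
        Node[] current = nodes;
        for (int i = 0; i < count; i++) { // linear search for a free node
            if (current[i].transaction == null) {
                current[i].transaction = tx;
                return current[i];
            }
        }
        if (count == current.length) {
            // Out of room: create and fill the doubled array first, then publish it.
            Node[] bigger = Arrays.copyOf(current, current.length * 2);
            nodes = bigger;
            current = bigger;
        }
        Node node = new Node();
        node.transaction = tx;
        current[count++] = node;
        return node;
    }

    // Any thread: removal only clears the node's field; the node stays in the array.
    static void remove(Node node) {
        node.transaction = null;
    }

    // Any thread: the array as currently published (may contain null slots).
    Node[] snapshot() {
        return nodes;
    }

    int capacity() {
        return nodes.length;
    }
}
```

In this sketch, the old and new arrays hold the same `Node` objects after a resize. A removal that nulls `node.transaction` from another thread is therefore visible through whichever array a reader happens to hold.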

Why all of this?

Well, only a single thread can ever register a transaction for a particular DynamicArray instance, which means we don’t have to worry about concurrent additions. However, a transaction may need to remove itself from the list while running on a different thread. We still don’t use any concurrency control for that. Instead, removing the transaction is done by setting the node’s Transaction field to null. Since only the owning transaction ever does that, this is safe.

Other threads, however, need to read this information. They do that by scanning through all the thread values and then accessing each DynamicArray directly, so the structure must be safe for concurrent reads. In most scenarios the array is more or less static: once it gets full enough, it never grows again and its values stay in place, so other threads are effectively just reading an array of Nodes. We do need to be careful when we expand the array to make more room. We do this by first creating the new array, copying the values into it, and only then setting it in the thread-local instance.

This way, concurrent code may see either the old array or the new one, but never needs to traverse both. When traversing, it goes through the nodes and checks their Transaction values.

Remember that the Transaction is only ever set from the original thread, but it can be reset from another thread if the transaction moved between threads. We don’t really care. The way it works, we read the node’s Transaction field and then check its value (once we have a stable reference). The idea is that we don’t worry about data races. The worst that can happen is that we see an older view of the data, which is perfectly fine for our purposes.
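The reader side, including the scan over every thread's array, can be sketched the same way. Again, this is a hypothetical Java version, not the post's code. Java's `ThreadLocal` has no counterpart to .NET's `ThreadLocal<T>.Values`, so this sketch assumes a `CopyOnWriteArrayList` registry that each thread's holder joins on first use. `TxNode`, `ActiveTransactions`, and `Holder` are illustrative names. The reader reads the array reference once, and reads each node's `transaction` field once into a local. A concurrent resize or removal can therefore only make its view stale: it never traverses two arrays or checks a field that changes under it.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical sketch of the reader side. Java's ThreadLocal cannot enumerate
// other threads' values (unlike .NET's ThreadLocal<T>.Values), so each
// per-thread holder registers itself in a shared list when it is created.
final class TxNode {
    volatile Object transaction; // null means the slot is free
}

final class ActiveTransactions {
    static final class Holder {
        volatile TxNode[] nodes = new TxNode[2]; // replaced only by the owning thread
        int count;                               // owning thread only
    }

    private final List<Holder> all = new CopyOnWriteArrayList<>();
    private final ThreadLocal<Holder> local = ThreadLocal.withInitial(() -> {
        Holder h = new Holder();
        all.add(h); // make this thread's array visible to scanners
        return h;
    });

    // Owning thread only. Slot reuse is omitted here to keep the sketch short.
    TxNode register(Object tx) {
        Holder h = local.get();
        TxNode[] current = h.nodes;
        if (h.count == current.length) {
            current = Arrays.copyOf(current, current.length * 2); // copy first...
            h.nodes = current;                                    // ...then publish
        }
        TxNode node = new TxNode();
        node.transaction = tx;
        current[h.count++] = node;
        return node;
    }

    // Any thread: collect the active transactions; stale results are acceptable.
    List<Object> snapshot() {
        List<Object> result = new ArrayList<>();
        for (Holder h : all) {
            TxNode[] nodes = h.nodes; // a single read: the old array or the new one
            for (TxNode node : nodes) {
                if (node == null) continue;   // slot not filled yet
                Object tx = node.transaction; // read once into a stable local
                if (tx != null) result.add(tx);
            }
        }
        return result;
    }
}
```

Removing a transaction is still just `node.transaction = null`, done from whatever thread the transaction is currently running on.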

This is pretty complex, but the code itself is simple enough, and the performance benefit justifies it several times over.

More posts in "Optimizing read transaction startup time" series:

- (31 Oct 2016) Racy data structures

- (28 Oct 2016) Every little bit helps, a LOT

- (26 Oct 2016) The performance triage

- (25 Oct 2016) Unicode ate my perf and all I got was

- (24 Oct 2016) Don’t ignore the context

- (21 Oct 2016) Getting frisky

- (20 Oct 2016) The low hanging fruit

Comments

I don't understand how this works. It seems contradictory to me. The thread that starts a transaction adds it to its own thread-local _activeTransactions, but since it cannot access other threads' thread-local storage, how are the _activeTransactions belonging to other threads updated?

Jesus, the active transactions for other threads aren't updated. ThreadLocal has a way to access all the thread-local data, so you can scan the local data from all threads.

I didn't know .NET 4.5 introduced the ThreadLocal.Values property.

I think there must be something missing from this post about the functionality of DynamicArray, because it's not clear to me what advantage it has over a simple List<T>. The only mention of its capabilities is this:

List<T> already does this, as you can see in List.Capacity. If anything, it should be marginally faster, because you don't have to set the ThreadLocal value when you grow capacity; it's always the same List<T> instance.

philippecp, yes, it does, but it doesn't allow us to read from it while it is doing that. The DynamicArray ensures that at all times, a reader may:

List<T> doesn't ensure that this is valid
