Optimizing read transaction startup time: Don’t ignore the context

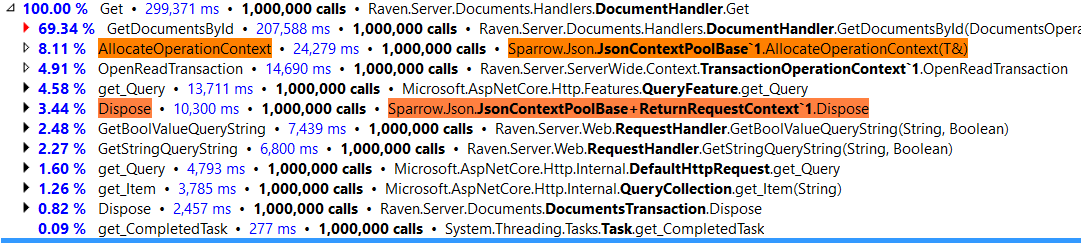

We focused on opening the transaction, but we also have the context management to deal with. In this case, we have:

And you can see that it costs us over 11.5% of the total request time. When we started this optimization phase, by the way, it took about 14% of the request time, so along the way our previous optimizations have also helped us reduce it from 42 μs to just 35 μs.

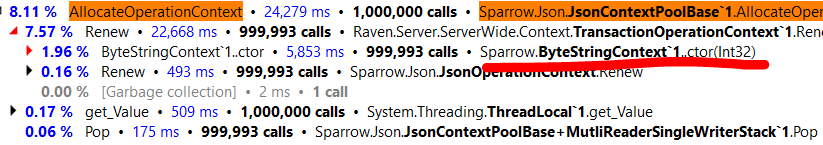

Drilling into this, it becomes clear what is going on:

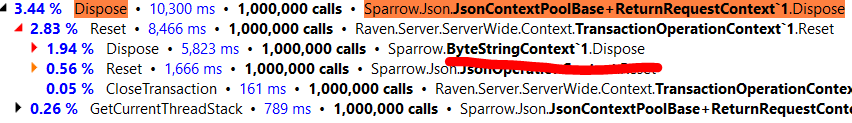

The problem is that on each context release we will dispose the ByteStringContext, and on each operation allocation we’ll create a new one. That was done as part of performance work aimed at reducing memory utilization, and it looks like we were too aggressive there. We do want to keep those ByteStringContext around if we are going to immediately use them, after all.

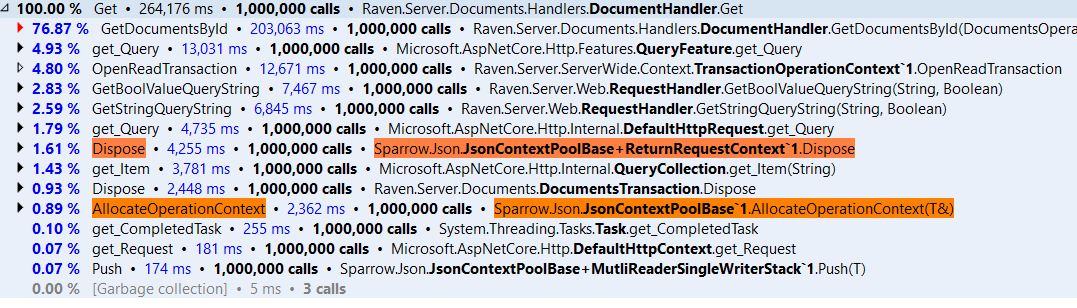

I changed it so the actual disposal will only happen when the context is using fragmented memory, and the results were nice.
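To make the idea concrete, here is a minimal sketch of that release policy. It is written in Python for brevity and is not the actual RavenDB code: `ByteStringArena`, `OperationContext`, and the `fragmented` flag are hypothetical stand-ins for the ByteStringContext and its owning context, and how fragmentation is actually detected is assumed away.

```python
class ByteStringArena:
    """Hypothetical stand-in for ByteStringContext: a reusable memory arena."""

    def __init__(self):
        self.fragmented = False  # assumption: the arena can report fragmentation
        self.disposed = False

    def dispose(self):
        # In the real system this would hand native memory back.
        self.disposed = True


class OperationContext:
    """Hypothetical owning context that is acquired and released per request."""

    def __init__(self):
        self._arena = None

    def acquire(self):
        # Create an arena only when we do not already hold a usable one.
        if self._arena is None:
            self._arena = ByteStringArena()
        return self._arena

    def release(self):
        # Old behavior (assumed): always dispose here, forcing a new
        # allocation on the next acquire.
        # New behavior: dispose only when the arena is fragmented,
        # otherwise keep it around for the next operation.
        if self._arena is not None and self._arena.fragmented:
            self._arena.dispose()
            self._arena = None
```

With this policy, back-to-back requests on the same context reuse one arena, and the dispose/allocate cost is paid only when the memory has actually become fragmented.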

The profiler output after this change is much nicer to look at, to be frank. Overall, we took the random reads scenario and moved it from a million reads in 342 seconds (under the profiler) to 264 seconds (under the profiler). Note that those times are cumulative: since we run that in multiple threads, the actual clock time for this (in the profiler) is just over a minute.

And that just leaves us with the big silent doozy of GetDocumentsById, which now takes a whopping 77% of the request time.

More posts in "Optimizing read transaction startup time" series:

- (31 Oct 2016) Racy data structures

- (28 Oct 2016) Every little bit helps, a LOT

- (26 Oct 2016) The performance triage

- (25 Oct 2016) Unicode ate my perf and all I got was

- (24 Oct 2016) Don’t ignore the context

- (21 Oct 2016) Getting frisky

- (20 Oct 2016) The low hanging fruit
